a997c9720844894b9119be2d6ea8dd6fa057c143,samples/dqn_expreplay.py,,,#,16

Before Change

q_vals = q_vals.cuda()

l = loss_fn(model(states), q_vals)

losses.append(l.data[0])

mean_q.append(q_vals.mean().data[0])

l.backward()

optimizer.step()

After Change

q_vals.append(train_q)

return torch.from_numpy(np.array(states, dtype=np.float32)), torch.stack(q_vals)

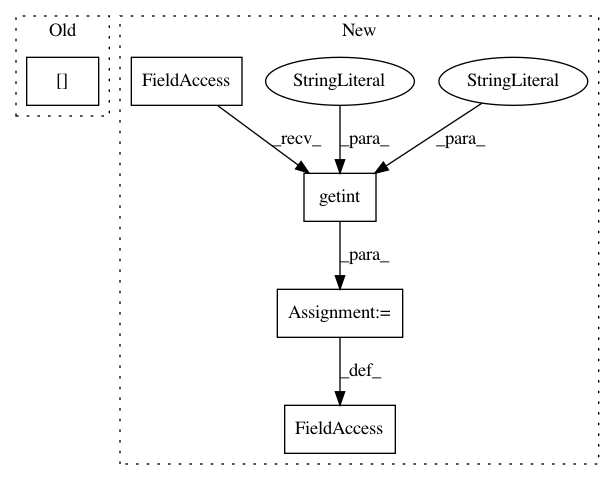

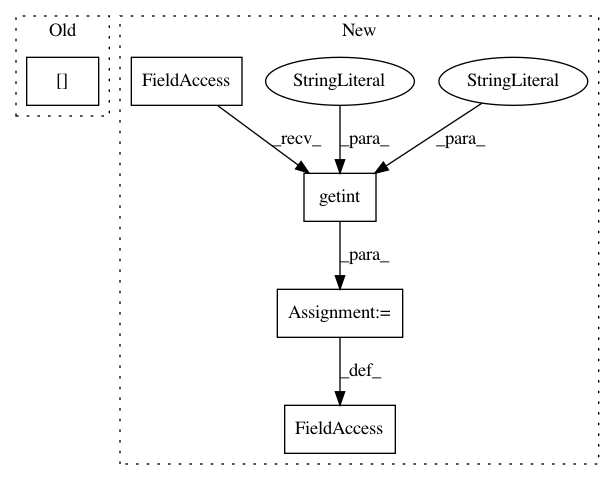

reward_sma = utils.SMAQueue(run.getint("stop", "mean_games", fallback=100))

for idx in range(10000):

exp_replay.populate(run.getint("exp_buffer", "populate"))
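The snippet above relies on `utils.SMAQueue`, whose implementation is not part of this record. As a rough sketch only, assuming it is a fixed-size window that reports a simple moving average of recent rewards, it could look like the following. The class body and the method names `push`, `full`, and `mean` are hypothetical and are not taken from Shmuma/ptan.

```python
from collections import deque


class SMAQueue:
    """Hypothetical stand-in for utils.SMAQueue (not the ptan implementation)."""

    def __init__(self, size):
        # deque with maxlen silently drops the oldest value once the window is full
        self.size = size
        self.queue = deque(maxlen=size)

    def push(self, value):
        # Record one new observation, e.g. the total reward of a finished game
        self.queue.append(value)

    def full(self):
        # True once the window holds `size` observations
        return len(self.queue) == self.size

    def mean(self):
        # Simple moving average over the current window; 0.0 for an empty window
        # (the empty-window value is an assumption made for this sketch)
        if not self.queue:
            return 0.0
        return sum(self.queue) / len(self.queue)


if __name__ == "__main__":
    reward_sma = SMAQueue(3)
    for reward in (1.0, 2.0, 3.0, 4.0):
        reward_sma.push(reward)
    print(reward_sma.full(), reward_sma.mean())
```

Under this assumption, a training loop would stop once the window is full and its mean exceeds a configured reward bound.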

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 5

Instances

Project Name: Shmuma/ptan

Commit Name: a997c9720844894b9119be2d6ea8dd6fa057c143

Time: 2017-05-03

Author: maxl@fornova.net

File Name: samples/dqn_expreplay.py

Class Name:

Method Name:

Project Name: PyMVPA/PyMVPA

Commit Name: 378f02bf9cd59fa2609ce3339be5885599ae1fac

Time: 2008-06-23

Author: michael.hanke@gmail.com

File Name: mvpa/base/__init__.py

Class Name:

Method Name:

Project Name: Shmuma/ptan

Commit Name: 584d38348bfe5246ff0d128bb23f1355560788db

Time: 2017-05-21

Author: max.lapan@gmail.com

File Name: samples/dqn_tweaks_atari.py

Class Name:

Method Name: