20ca9d882e448df5f1a9c5c0d74772763651826a,thinc/model.py,Model,use_params,#Model#Any#,296

Before Change

yield

if backup is not None:

copy_array(dst=self._mem.weights, src=backup)

for i, context in enumerate(contexts):

# This is ridiculous, but apparently it's what you

# have to do to make this work across Python 2/3?

try:

next(context.gen)

except StopIteration:

pass

def walk(self) -> Iterable["Model"]:

"""Iterate out layers of the model, breadth-first."""

queue = [self]

seen: Set[int] = set()

After Change

param = params[self.id]

backup = weights.copy()

copy_array(dst=weights, src=param)

with contextlib.ExitStack() as stack:

for layer in self.layers:

stack.enter_context(layer.use_params(params))

for shim in self.shims:

stack.enter_context(shim.use_params(params))

yield

if backup is not None:

copy_array(dst=self._mem.weights, src=backup)

def walk(self) -> Iterable["Model"]:
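
The change above replaces manual stepping of each context's generator (`next(context.gen)`) with `contextlib.ExitStack`, which enters every child context and unwinds them in reverse order on exit. A minimal, self-contained sketch of that pattern follows. The `Layer` class, its `key` and `children` attributes, and the list-based weights are hypothetical stand-ins for illustration, not thinc's `Model` API. The `try`/`finally` is also an addition that the snippet above does not show.

```python
import contextlib
from typing import Dict, Iterator, List, Optional


class Layer:
    """Hypothetical stand-in for a model node; not thinc's Model class."""

    def __init__(
        self,
        key: int,
        weights: List[float],
        children: Optional[List["Layer"]] = None,
    ) -> None:
        self.key = key
        self.weights = weights
        self.children = children or []

    @contextlib.contextmanager
    def use_params(self, params: Dict[int, List[float]]) -> Iterator[None]:
        # Swap in replacement weights for this node, if any were supplied.
        backup = None
        if self.key in params:
            backup = list(self.weights)
            self.weights[:] = params[self.key]
        try:
            # ExitStack enters each child's context manager and guarantees
            # they are all exited (in reverse order) when the block ends.
            with contextlib.ExitStack() as stack:
                for child in self.children:
                    stack.enter_context(child.use_params(params))
                yield
        finally:
            # Restore the original weights (assumption: restore even on error).
            if backup is not None:
                self.weights[:] = backup


leaf = Layer(2, [1.0, 1.0])
root = Layer(1, [0.0], [leaf])

with root.use_params({1: [9.0], 2: [5.0, 6.0]}):
    inside = (list(root.weights), list(leaf.weights))

after = (list(root.weights), list(leaf.weights))
```

Within the `with` block both nodes see the substituted values; once it exits, each node's original weights are restored without any manual generator handling.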

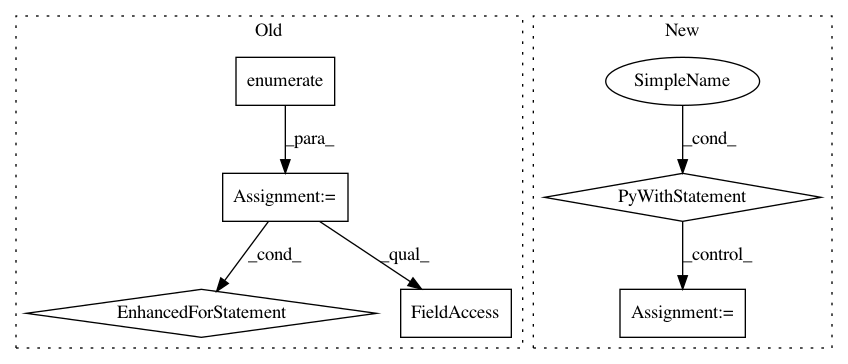

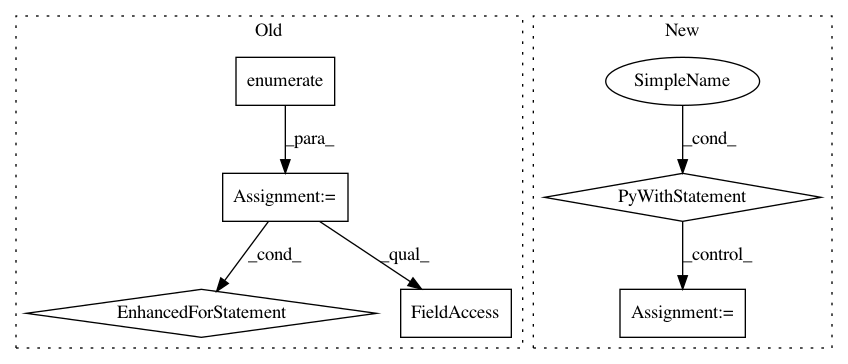

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 6

Instances

Project Name: explosion/thinc

Commit Name: 20ca9d882e448df5f1a9c5c0d74772763651826a

Time: 2020-01-04

Author: honnibal+gh@gmail.com

File Name: thinc/model.py

Class Name: Model

Method Name: use_params

Project Name: nipy/dipy

Commit Name: a17b669606cdc5c16fb823b5f00abcacf6a68d70

Time: 2012-10-24

Author: mrbago@gmail.com

File Name: dipy/reconst/dti.py

Class Name: TensorFit

Method Name: odf

Project Name: cornellius-gp/gpytorch

Commit Name: 303217b34070dc47a86622b62764098999b0d7f5

Time: 2018-12-12

Author: gpleiss@gmail.com

File Name: gpytorch/lazy/lazy_tensor.py

Class Name: LazyTensor

Method Name: _quad_form_derivative