24357bb63d8338158ddb7fefaf35ca4bd7064f31,train.py,,train_epoch,#Any#Any#Any#Any#Any#,39

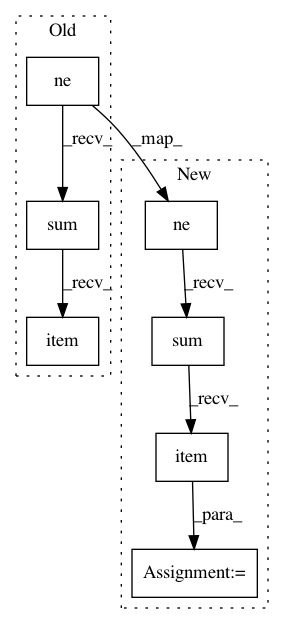

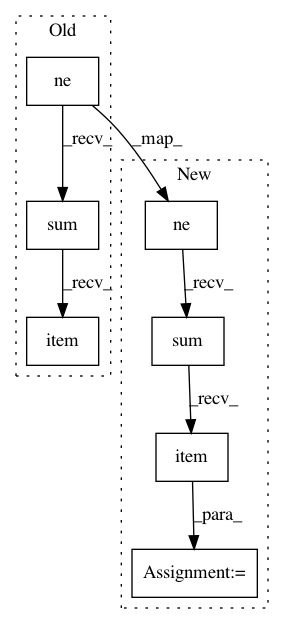

Before Change

optimizer.update_learning_rate()

# note keeping

n_words = gold.data.ne(Constants.PAD).sum().item()

n_total_words += n_words

n_total_correct += n_correct

total_loss += loss.item()

After Change

# note keeping

total_loss += loss.item()

msk_non_pad = gold.ne(Constants.PAD)

n_word = msk_non_pad.sum().item()

n_word_total += n_word

n_word_correct += n_correct

loss_per_word = total_loss/n_word_total
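
The bookkeeping in the After Change snippet can be sketched without PyTorch. This is a minimal illustration, not the project's code: plain Python lists stand in for the `gold` tensor and the predictions, `PAD = 0` is an assumed padding index (the original reads it from `Constants.PAD`), and the `accumulate_batch` helper and `totals` dictionary are hypothetical names introduced here.

```python
PAD = 0  # assumed padding index; the original uses Constants.PAD


def accumulate_batch(gold, pred, batch_loss, totals):
    """Fold one batch into the running totals and return the loss per word."""
    # Mask of positions holding real tokens (mirrors gold.ne(Constants.PAD)).
    msk_non_pad = [token != PAD for token in gold]
    n_word = sum(msk_non_pad)
    # Count correct predictions only at non-padding positions.
    n_correct = sum(
        1 for p, g, keep in zip(pred, gold, msk_non_pad) if keep and p == g
    )

    totals["total_loss"] += batch_loss
    totals["n_word_total"] += n_word
    totals["n_word_correct"] += n_correct
    # Normalise by the number of real words seen so far, not by batch count.
    return totals["total_loss"] / totals["n_word_total"]


totals = {"total_loss": 0.0, "n_word_total": 0, "n_word_correct": 0}
loss_per_word = accumulate_batch(
    gold=[5, 7, 2, PAD, PAD],
    pred=[5, 3, 2, 9, 9],
    batch_loss=1.5,
    totals=totals,
)
```

Here three of the five positions are real words, so the padded positions contribute nothing to the word count or the accuracy. As in the snippet above, the division is unguarded: if every position seen so far were padding, `n_word_total` would be zero and the division would fail.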

In pattern: SUPERPATTERN

Frequency: 4

Non-data size: 7

Instances

Project Name: jadore801120/attention-is-all-you-need-pytorch

Commit Name: 24357bb63d8338158ddb7fefaf35ca4bd7064f31

Time: 2018-08-22

Author: yhhuang@nlg.csie.ntu.edu.tw

File Name: train.py

Class Name:

Method Name: train_epoch

Project Name: jadore801120/attention-is-all-you-need-pytorch

Commit Name: 24357bb63d8338158ddb7fefaf35ca4bd7064f31

Time: 2018-08-22

Author: yhhuang@nlg.csie.ntu.edu.tw

File Name: train.py

Class Name:

Method Name: eval_epoch

Project Name: elbayadm/attn2d

Commit Name: 718677ebb044e27aaf1a30640c2f7ab6b8fa8509

Time: 2019-09-18

Author: namangoyal@learnfair0893.h2.fair

File Name: fairseq/criterions/masked_lm.py

Class Name: MaskedLmLoss

Method Name: forward