d35d95925d72f4bf83d4eaeb66ec3e93a1b3a663,src/garage/tf/optimizers/first_order_optimizer.py,FirstOrderOptimizer,__init__,#FirstOrderOptimizer#Any#Any#Any#Any#Any#Any#Any#Any#,32

Before Change

if tf_optimizer_cls is None:
    tf_optimizer_cls = tf.compat.v1.train.AdamOptimizer
if tf_optimizer_args is None:
    tf_optimizer_args = dict(learning_rate=1e-3)
self._tf_optimizer = tf_optimizer_cls(**tf_optimizer_args)
self._max_epochs = max_epochs
self._tolerance = tolerance
self._batch_size = batch_size

After Change

if optimizer is None:
    optimizer = tf.compat.v1.train.AdamOptimizer
learning_rate = learning_rate or dict(learning_rate=_Default(1e-3))
if not isinstance(learning_rate, dict):
    learning_rate = dict(learning_rate=learning_rate)
self._tf_optimizer = optimizer
self._learning_rate = learning_rate
self._max_epochs = max_epochs
self._tolerance = tolerance

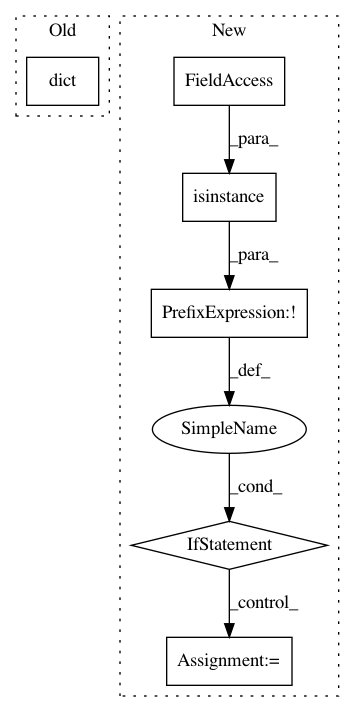

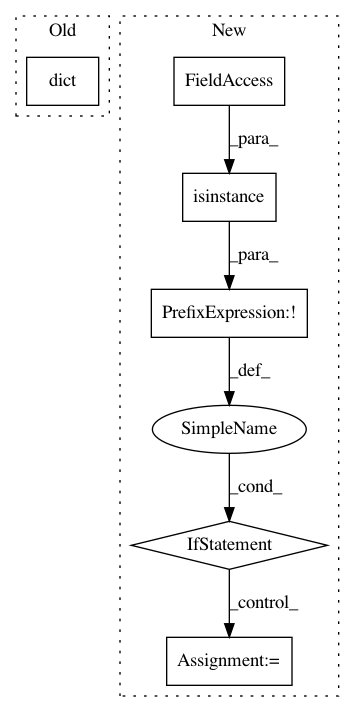

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 6

Instances

Project Name: rlworkgroup/garage

Commit Name: d35d95925d72f4bf83d4eaeb66ec3e93a1b3a663

Time: 2020-06-06

Author: chuiyili@usc.edu

File Name: src/garage/tf/optimizers/first_order_optimizer.py

Class Name: FirstOrderOptimizer

Method Name: __init__

Project Name: rlworkgroup/garage

Commit Name: e4b6611cb73ef7658f028831be1aa6bd85ecbed0

Time: 2020-08-14

Author: 38871737+avnishn@users.noreply.github.com

File Name: src/garage/torch/policies/deterministic_mlp_policy.py

Class Name: DeterministicMLPPolicy

Method Name: get_action

Project Name: deepgram/kur

Commit Name: 7e2d3f6c0ce76c3b77d771b706c083aeab5ccddc

Time: 2017-04-11

Author: ajsyp@syptech.net

File Name: kur/kurfile.py

Class Name: Kurfile

Method Name: get_optimizer