3bc7e38ed98f3b7a13fcee2726ec38b27e5c4e1b,onmt/Models.py,Encoder,__init__,#Encoder#Any#Any#Any#,36

Before Change

                bidirectional=opt.brnn)
        self.multiattn = nn.ModuleList([onmt.modules.MultiHeadedAttention(8, self.hidden_size) for _ in range(self.layers)])
        self.linear_out = nn.ModuleList([BLinear(self.hidden_size, 2*self.hidden_size) for _ in range(self.layers)])
        self.linear_final = nn.ModuleList([BLinear(2*self.hidden_size, self.hidden_size) for _ in range(self.layers)])
        self.layer_norm = nn.ModuleList([BLayerNorm(self.hidden_size) for _ in range(self.layers)])

After Change

        if self.positional_encoding:
            self.pe = make_positional_encodings(opt.word_vec_size, 5000).cuda()
        if self.encoder_layer == "transformer":
            self.transformer = nn.ModuleList([TransformerEncoder(self.hidden_size, opt)
                                              for _ in range(opt.layers)])

    def load_pretrained_vectors(self, opt):
        if opt.pre_word_vecs_enc is not None:
            pretrained = torch.load(opt.pre_word_vecs_enc)
            self.word_lut.weight.data.copy_(pretrained)
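
The change above replaces four parallel per-layer `nn.ModuleList`s (attention, two linear maps, layer norm) with a single `nn.ModuleList` of self-contained layer modules. A minimal sketch of that refactoring follows. It is not the OpenNMT-py implementation: `EncoderLayer` is a hypothetical stand-in for `TransformerEncoder`, the standard `nn.MultiheadAttention`, `nn.Linear`, and `nn.LayerNorm` are assumed in place of `onmt.modules.MultiHeadedAttention`, `BLinear`, and `BLayerNorm`, and the residual wiring inside `forward` is illustrative only.

```python
import torch
import torch.nn as nn


class EncoderLayer(nn.Module):
    """Hypothetical stand-in for TransformerEncoder: one layer owns all its submodules."""

    def __init__(self, hidden_size, num_heads=8):
        super().__init__()
        # The four submodules that used to live in four separate ModuleLists.
        self.attn = nn.MultiheadAttention(hidden_size, num_heads)
        self.linear_out = nn.Linear(hidden_size, 2 * hidden_size)
        self.linear_final = nn.Linear(2 * hidden_size, hidden_size)
        self.layer_norm = nn.LayerNorm(hidden_size)

    def forward(self, x):
        # x has shape (seq_len, batch, hidden_size), the default layout
        # expected by nn.MultiheadAttention.
        attn_out, _ = self.attn(x, x, x)
        x = self.layer_norm(x + attn_out)
        # Assumed feed-forward wiring: expand, ReLU, project back, residual.
        return x + self.linear_final(torch.relu(self.linear_out(x)))


class Encoder(nn.Module):
    """Stacks the layers in one ModuleList, mirroring the 'After Change' shape."""

    def __init__(self, hidden_size, layers):
        super().__init__()
        self.transformer = nn.ModuleList(
            [EncoderLayer(hidden_size) for _ in range(layers)]
        )

    def forward(self, x):
        for layer in self.transformer:
            x = layer(x)
        return x


encoder = Encoder(hidden_size=16, layers=2)
out = encoder(torch.zeros(5, 3, 16))  # (seq_len=5, batch=3, hidden=16)
```

Grouping the submodules per layer means the forward pass iterates over one list rather than indexing four lists in lockstep, and the layer count is set in a single place.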

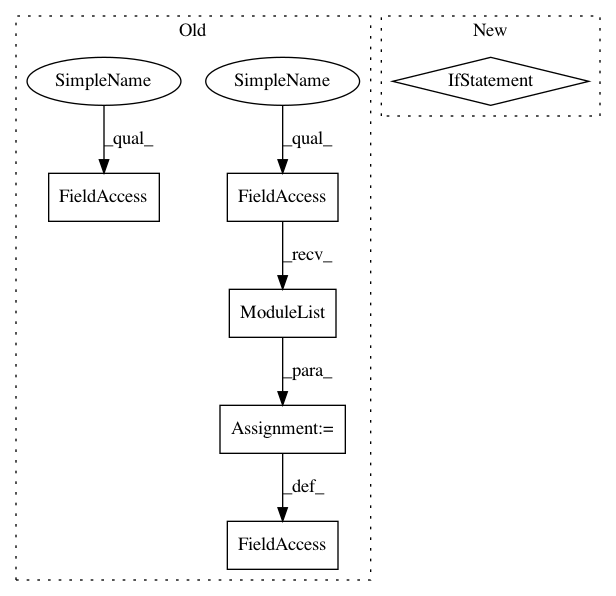

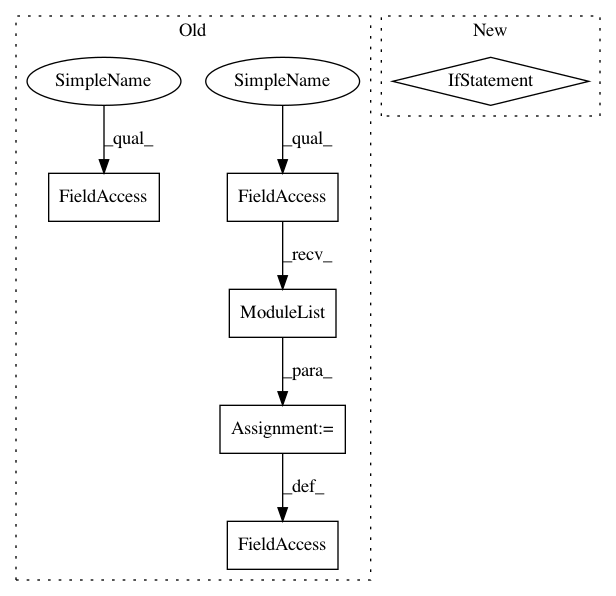

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 6

Instances

Project Name: OpenNMT/OpenNMT-py

Commit Name: 3bc7e38ed98f3b7a13fcee2726ec38b27e5c4e1b

Time: 2017-06-23

Author: srush@sum1gpu02.rc.fas.harvard.edu

File Name: onmt/Models.py

Class Name: Encoder

Method Name: __init__

Project Name: dpressel/mead-baseline

Commit Name: 4d75253f817053abf2eb7bd909b5d0389b82814d

Time: 2021-03-02

Author: dpressel@gmail.com

File Name: layers/eight_mile/pytorch/layers.py

Class Name: TransformerEncoderStack

Method Name: __init__

Project Name: OpenNMT/OpenNMT-py

Commit Name: 3bc7e38ed98f3b7a13fcee2726ec38b27e5c4e1b

Time: 2017-06-23

Author: srush@sum1gpu02.rc.fas.harvard.edu

File Name: onmt/Models.py

Class Name: Encoder

Method Name: __init__

Project Name: NVIDIA/waveglow

Commit Name: d19cc9dd253484c12e09e3b9a07479c9bcf9b62f

Time: 2018-11-15

Author: rafaelvalle@nvidia.com

File Name: glow_old.py

Class Name: WN

Method Name: __init__