70a188776f7470c838dd22b1636462b75573a734,src/gluonnlp/models/roberta.py,RobertaEncoder,__init__,#RobertaEncoder#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#,307

Before Change

    self.activation = activation
    self.dtype = dtype
    self.return_all_hiddens = return_all_hiddens
    with self.name_scope():
        self.all_layers = nn.HybridSequential(prefix="layers_")
        with self.all_layers.name_scope():
            for layer_idx in range(self.num_layers):
                self.all_layers.add(
                    TransformerEncoderLayer(
                        units=self.units,
                        hidden_size=self.hidden_size,
                        num_heads=self.num_heads,
                        attention_dropout_prob=self.attention_dropout_prob,
                        hidden_dropout_prob=self.hidden_dropout_prob,
                        layer_norm_eps=self.layer_norm_eps,
                        weight_initializer=weight_initializer,
                        bias_initializer=bias_initializer,
                        activation=self.activation,
                        dtype=self.dtype,
                        prefix="{}_".format(layer_idx)
                    )
                )

def hybrid_forward(self, F, x, valid_length):
    atten_mask = gen_self_attn_mask(F, x, valid_length,
                                    dtype=self.dtype, attn_type="full")
    inner_states = [x]
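`hybrid_forward` builds its attention mask from `valid_length` with `gen_self_attn_mask(..., attn_type="full")`. The pure-Python sketch below shows what such a bidirectional mask looks like for a padded batch. It assumes that query position i may attend to key position j only when both lie inside the sequence's valid length. That is a reading of the "full" mode, not a copy of the library code. The name `full_self_attn_mask` is hypothetical.

```python
from typing import List


def full_self_attn_mask(seq_len: int, valid_length: List[int]) -> List[List[List[int]]]:
    """Return a (batch, seq_len, seq_len) 0/1 mask for bidirectional self-attention.

    Assumption (hypothetical helper): mask[b][i][j] == 1 only when both the
    query position i and the key position j are inside the first
    valid_length[b] tokens of sequence b.
    """
    mask = []
    for length in valid_length:
        rows = []
        for i in range(seq_len):
            # Padding queries (i >= length) end up with an all-zero row.
            rows.append([int(i < length and j < length) for j in range(seq_len)])
        mask.append(rows)
    return mask


# Two sequences padded to length 4; the second has only 2 real tokens.
m = full_self_attn_mask(4, [4, 2])
for row in m[1]:
    print(row)
# [1, 1, 0, 0]
# [1, 1, 0, 0]
# [0, 0, 0, 0]
# [0, 0, 0, 0]
```

Unlike a causal mask, every valid token sees every other valid token in both directions. Only padding is hidden.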

After Change

             embed_initializer=TruncNorm(stdev=0.02),
             weight_initializer=TruncNorm(stdev=0.02),
             bias_initializer="zeros",
             dtype="float32",
             use_pooler=False,
             use_mlm=True,
             untie_weight=False,
             encoder_normalize_before=True,
             return_all_hiddens=False):

    Parameters
    ----------
    vocab_size
    units
    hidden_size
    num_layers
    num_heads
    max_length
    hidden_dropout_prob
    attention_dropout_prob
    pos_embed_type
    activation
    pooler_activation
    layer_norm_eps
    embed_initializer
    weight_initializer
    bias_initializer
    dtype
    use_pooler
        Whether to use classification head
    use_mlm
        Whether to use lm head, if False, forward return hidden states only
    untie_weight
        Whether to untie weights between embeddings and classifiers
    encoder_normalize_before
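The new signature initializes both embeddings and weights with `TruncNorm(stdev=0.02)`. The standard-library sketch below illustrates what a truncated-normal initializer does: draw from N(mean, stdev²) and re-draw any sample that lands too far from the mean. The two-standard-deviation cut-off is an assumption based on the common convention for such initializers; check the `TruncNorm` documentation for its exact bound. The function `trunc_norm` is hypothetical.

```python
import random
from typing import List


def trunc_norm(n: int, stdev: float = 0.02, mean: float = 0.0,
               bound: float = 2.0, seed: int = 0) -> List[float]:
    """Draw n values from N(mean, stdev**2) by rejection sampling.

    Assumption: samples farther than bound * stdev from the mean are
    discarded and re-drawn (bound=2.0 is the usual convention).
    """
    rng = random.Random(seed)
    values: List[float] = []
    while len(values) < n:
        v = rng.gauss(mean, stdev)
        if abs(v - mean) <= bound * stdev:  # keep in-range samples only
            values.append(v)
    return values


weights = trunc_norm(1000, stdev=0.02)
print(max(abs(w) for w in weights) <= 0.04)  # True
```

Truncation keeps a small initial scale (0.02) from producing rare large outliers, which is why it is a popular choice for Transformer embeddings.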
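The `untie_weight` docstring refers to sharing weights between the embeddings and the classifier (the LM head). The sketch below shows the general weight-tying idea. A tied output layer scores the vocabulary with the token embedding matrix itself, while an untied one keeps an independent copy. `TinyLMHead` and `rowwise_dot` are hypothetical names. The sketch omits any bias or hidden transform the real LM head may apply.

```python
from typing import List

Matrix = List[List[float]]


def rowwise_dot(E: Matrix, h: List[float]) -> List[float]:
    """Compute E @ h: one logit per vocabulary row of E (vocab x units)."""
    return [sum(e * x for e, x in zip(row, h)) for row in E]


class TinyLMHead:
    """Hypothetical output layer illustrating tied vs. untied weights.

    Tied: the classifier *is* the embedding matrix (same object).
    Untied (untie_weight=True): the classifier starts as a copy and can
    diverge from the embedding during training.
    """

    def __init__(self, embedding: Matrix, untie_weight: bool = False):
        self.embedding = embedding
        self.classifier = [row[:] for row in embedding] if untie_weight else embedding

    def logits(self, hidden: List[float]) -> List[float]:
        return rowwise_dot(self.classifier, hidden)


emb = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # vocab_size=3, units=2
tied = TinyLMHead(emb)
untied = TinyLMHead(emb, untie_weight=True)
emb[0][0] = 5.0  # simulate an update to the embedding table
print(tied.logits([1.0, 2.0]))    # [5.0, 2.0, 3.0]
print(untied.logits([1.0, 2.0]))  # [1.0, 2.0, 3.0]
```

Tying saves a vocab x units parameter matrix and couples input and output representations. The `untie_weight=False` default in the signature keeps that coupling.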

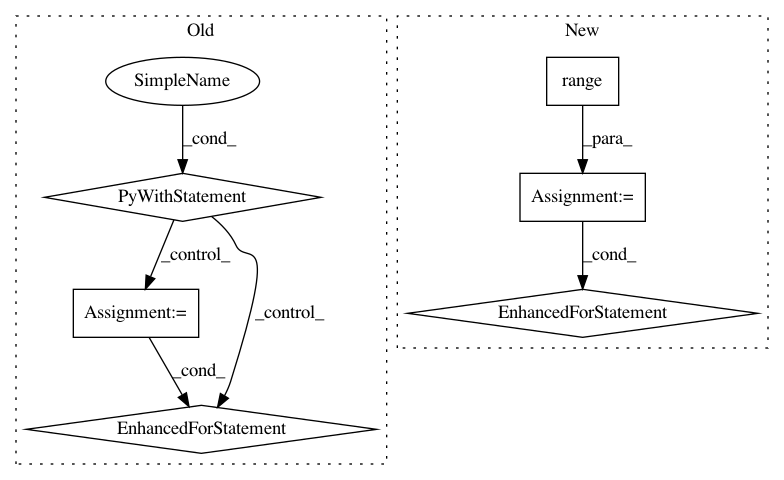

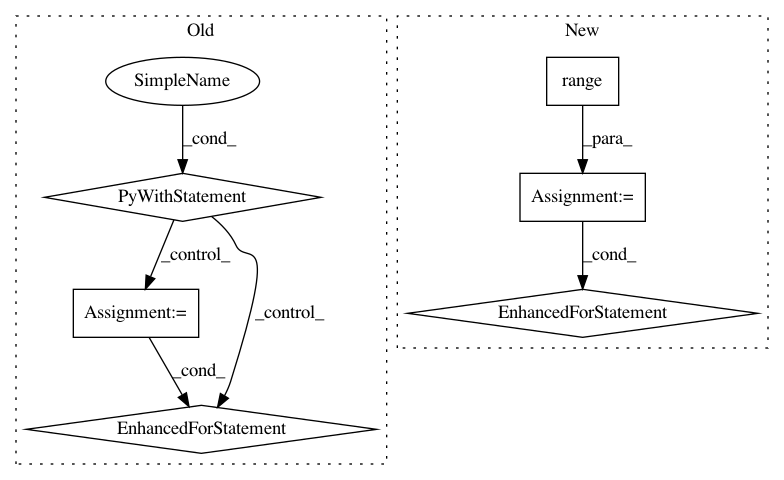

In pattern: SUPERPATTERN
Frequency: 4
Non-data size: 6

Instances

Project Name: dmlc/gluon-nlp
Commit Name: 70a188776f7470c838dd22b1636462b75573a734
Time: 2020-07-16
Author: lausen@amazon.com
File Name: src/gluonnlp/models/roberta.py
Class Name: RobertaEncoder
Method Name: __init__

Project Name: dmlc/gluon-nlp
Commit Name: 70a188776f7470c838dd22b1636462b75573a734
Time: 2020-07-16
Author: lausen@amazon.com
File Name: src/gluonnlp/models/bert.py
Class Name: BertTransformer
Method Name: __init__

Project Name: dmlc/gluon-nlp
Commit Name: 090944e816fd3ff8e861fba4452851e0a901491d
Time: 2019-01-28
Author: linhaibin.eric@gmail.com
File Name: scripts/language_model/large_word_language_model.py
Class Name:
Method Name: train

Project Name: deepchem/deepchem
Commit Name: 1330ea3102315bd79c9c6efdbd8818c8e2a3cb8f
Time: 2019-07-09
Author: peastman@stanford.edu
File Name: deepchem/metalearning/maml.py
Class Name: MAML
Method Name: fit