ef2e9106b17ea0d8bb56cee2ce7442ca4971afae,basic/model.py,Model,_build_forward,#Model#,50

Before Change

    qqc = tf.reshape(tf.reduce_max(tf.nn.relu(qqc), 2), [-1, JQ, d])

    with tf.variable_scope("word_emb"):
        if config.finetune:
            if config.mode == "train":
                word_emb_mat = tf.get_variable("word_emb_mat", dtype="float", shape=[VW, config.word_emb_size], initializer=get_initializer(config.emb_mat))
            else:
                word_emb_mat = tf.get_variable("word_emb_mat", shape=[VW, config.word_emb_size], dtype="float")
        else:
            word_emb_mat = config.emb_mat.astype("float32")
        Ax = tf.nn.embedding_lookup(word_emb_mat, self.x)  # [N, M, JX, d]
        Aq = tf.nn.embedding_lookup(word_emb_mat, self.q)  # [N, JQ, d]
        Ax = linear([Ax], d, False, scope="Ax_reshape", wd=config.wd, input_keep_prob=config.input_keep_prob,
                    is_train=self.is_train)

After Change

    with tf.variable_scope("main"):
        u = tf.tile(tf.expand_dims(tf.expand_dims(u, 1), 1), [1, M, JX, 1])
        g0 = tf.concat(3, [h, u, h * u, tf.abs(h - u)])
        (fw_g1, bw_g1), _ = bidirectional_dynamic_rnn(cell, cell, g0, x_len, dtype="float", scope="h1")  # [N, M, JX, 2d]
        g1 = tf.concat(3, [fw_g1, bw_g1])
        (fw_g2, bw_g2), _ = bidirectional_dynamic_rnn(cell, cell, g1, x_len, dtype="float", scope="h2")  # [N, M, JX, 2d]
        g2 = tf.concat(3, [fw_g2, bw_g2])
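The `u` and `g0` lines above can be read as a broadcast followed by a concatenation along the last axis. The sketch below reproduces that step with plain Python lists so the shapes are easy to follow. It is an illustration only, not the project's code: the function name `match_features` is hypothetical, and it assumes `h` has shape [N, M, JX, d] and `u` has shape [N, d] before tiling, which is inferred from the `expand_dims` and `tile` arguments rather than stated in the snippet.

```python
def match_features(h, u):
    """Build [h, u, h*u, |h-u|] for every context position.

    h: nested lists shaped [N][M][JX][d] (assumed context states)
    u: nested lists shaped [N][d] (assumed one summary vector per example)
    Returns nested lists shaped [N][M][JX][4*d].
    """
    g0 = []
    for h_n, u_n in zip(h, u):          # batch axis N
        sents = []
        for h_m in h_n:                 # sentence axis M
            words = []
            for h_j in h_m:             # word axis JX
                # Reusing u_n at every (m, j) plays the role of expand_dims + tile.
                prod = [a * b for a, b in zip(h_j, u_n)]
                diff = [abs(a - b) for a, b in zip(h_j, u_n)]
                # List concatenation mirrors concatenating along axis 3.
                words.append(list(h_j) + list(u_n) + prod + diff)
            sents.append(words)
        g0.append(sents)
    return g0


# Tiny example with N=1, M=1, JX=2, d=2.
h = [[[[1.0, 2.0], [3.0, -1.0]]]]
u = [[0.5, 4.0]]
g0 = match_features(h, u)
print(g0[0][0][0])  # [1.0, 2.0, 0.5, 4.0, 0.5, 8.0, 0.5, 2.0]
```

Each position ends up with a feature vector four times the width of `h`, which is what the first `bidirectional_dynamic_rnn` call consumes as `g0`.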

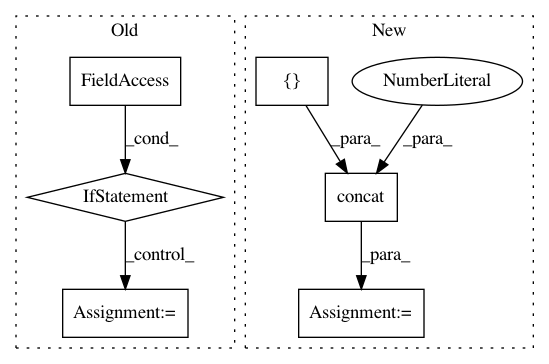

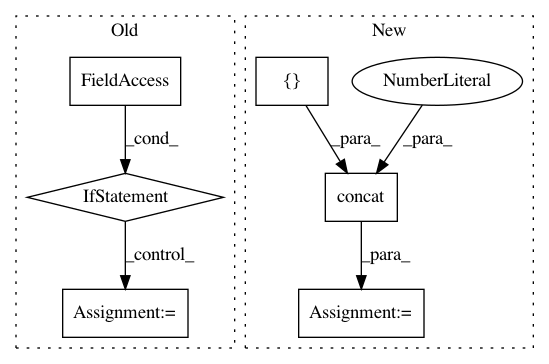

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 6

Instances

Project Name: wenwei202/iss-rnns

Commit Name: ef2e9106b17ea0d8bb56cee2ce7442ca4971afae

Time: 2016-09-04

Author: seominjoon@gmail.com

File Name: basic/model.py

Class Name: Model

Method Name: _build_forward

Project Name: wenwei202/iss-rnns

Commit Name: 6c4e91a811ad9e996579f99eec9b9e6f68db72dd

Time: 2016-09-13

Author: seominjoon@gmail.com

File Name: basic/model.py

Class Name: Model

Method Name: _build_forward

Project Name: wenwei202/iss-rnns

Commit Name: 48c7953c92a3004cb07b87c47e7a5ca2a9b2c83e

Time: 2016-08-22

Author: seominjoon@gmail.com

File Name: basic/model.py

Class Name: Model

Method Name: _build_forward