4887ef8baecbf5315ec0f235e56a4f93cd05aad7,cleverhans/attacks_tf.py,,spm,#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#,1947

Before Change

transforms = zip(sampled_dxs, sampled_dys, sampled_angles)
adv_xs = []
accs = []

# Perform the transformation
for (dx, dy, angle) in transforms:
    adv_xs.append(_apply_transformation(x, dx, dy, angle, batch_size))
    preds_adv = model.get_logits(adv_xs[-1])

    # Compute accuracy
    accs.append(tf.count_nonzero(tf.equal(tf.argmax(y, axis=-1),
                                          tf.argmax(preds_adv, axis=-1))))

# Return the adv_x with worst accuracy
adv_xs = tf.stack(adv_xs)
accs = tf.stack(accs)
return tf.gather(adv_xs, tf.argmin(accs))
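The selection step in the code above picks one transformation for the whole batch: the one with the fewest correct predictions. A minimal NumPy sketch of that logic follows, assuming the transformed images and logits have already been stacked along a leading sample axis. The function name `worst_transform_for_batch` and its argument layout are hypothetical and are not part of cleverhans.

```python
import numpy as np


def worst_transform_for_batch(adv_xs, preds, labels):
    """Return the transformed batch with the fewest correct predictions.

    Hypothetical helper for illustration only.
    adv_xs: (S, B, H, W, C) images, one full batch per transformation
    preds:  (S, B, K) logits for each transformed batch
    labels: (B, K) one-hot labels
    """
    # Compare predicted and true classes for every transformation.
    correct = preds.argmax(axis=-1) == labels.argmax(axis=-1)  # (S, B)
    # Number of correct predictions per transformation.
    accs = correct.sum(axis=1)  # (S,)
    # A single transformation index is chosen for all B examples.
    return adv_xs[accs.argmin()]  # (B, H, W, C)
```

Because only one index is selected, every example in the batch receives the same transformation, even when a different one would be more damaging for that particular example.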

After Change

# Return the adv_x with worst accuracy

# all_xents is n_total_samples x batch_size (SB)
all_xents = tf.stack(all_xents)  # SB

# We want the worst case sample, with the largest xent_loss
worst_sample_idx = tf.argmax(all_xents, axis=0)  # B

batch_size = tf.shape(x)[0]
keys = tf.stack([
    tf.range(batch_size, dtype=tf.int32),
    tf.cast(worst_sample_idx, tf.int32)
], axis=1)
transformed_ims_bshwc = tf.einsum("sbhwc->bshwc", transformed_ims)
after_lookup = tf.gather_nd(transformed_ims_bshwc, keys)  # BHWC
return after_lookup

def parallel_apply_transformations(x, transforms, black_border_size=0):
    transforms = tf.convert_to_tensor(transforms, dtype=tf.float32)
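The changed code selects the worst transformation separately for each example, using the per-example cross-entropy rather than a batch-level accuracy count. The indexing may be easier to follow in a small NumPy analogue, sketched below under the assumption that `transformed_ims` has shape (S, B, H, W, C) and `all_xents` has shape (S, B), as the shape comments suggest. The function name `worst_transform_per_example` is hypothetical, and integer-array indexing stands in for `tf.gather_nd`.

```python
import numpy as np


def worst_transform_per_example(transformed_ims, all_xents):
    """Pick, for each example, the transformed image with the largest loss.

    Hypothetical helper for illustration only.
    transformed_ims: (S, B, H, W, C)
    all_xents:       (S, B) per-sample, per-example cross-entropy
    """
    # Index of the highest-loss transformation for each example.
    worst_sample_idx = all_xents.argmax(axis=0)  # (B,)
    batch_size = transformed_ims.shape[1]
    # Move the batch axis first, mirroring einsum("sbhwc->bshwc").
    ims_bshwc = np.transpose(transformed_ims, (1, 0, 2, 3, 4))
    # Pair each batch index with its worst sample index, as the
    # stacked `keys` tensor does for tf.gather_nd.
    return ims_bshwc[np.arange(batch_size), worst_sample_idx]  # (B, H, W, C)
```

The result keeps the original batch shape, but each row can come from a different transformation.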

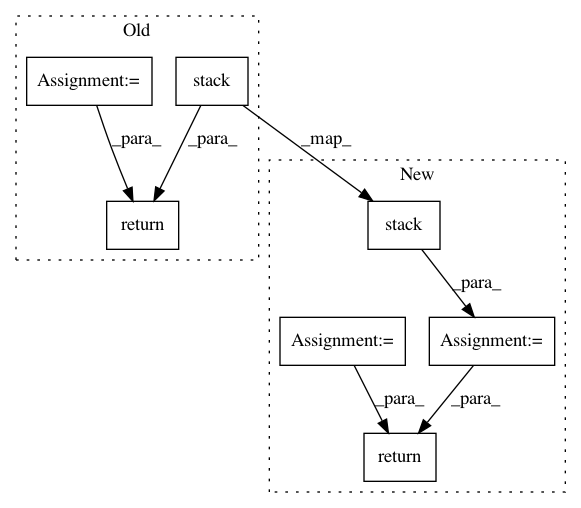

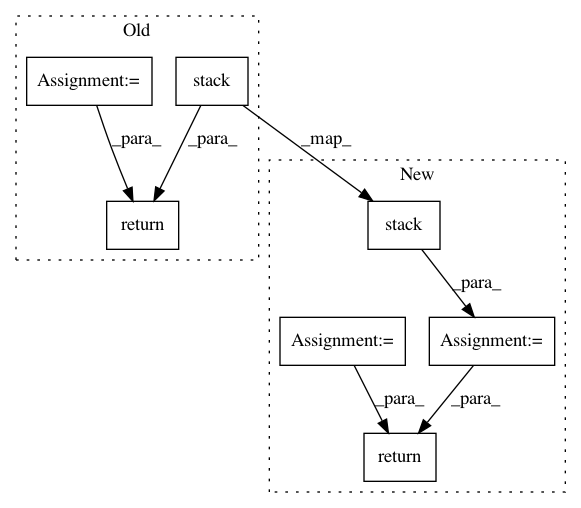

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 7

Instances

Project Name: tensorflow/cleverhans

Commit Name: 4887ef8baecbf5315ec0f235e56a4f93cd05aad7

Time: 2018-10-04

Author: nottombrown@gmail.com

File Name: cleverhans/attacks_tf.py

Class Name:

Method Name: spm

Project Name: pyprob/pyprob

Commit Name: b69abe85c319c7166ca0e156401df942a03a8d38

Time: 2018-02-10

Author: atilimgunes.baydin@gmail.com

File Name: pyprob/util.py

Class Name:

Method Name: to_variable

Project Name: pytorch/text

Commit Name: cb287ae46f67324fbd4eb0a6a919673344ac1017

Time: 2018-04-08

Author: yinpenghhz@hotmail.com

File Name: torchtext/data/field.py

Class Name: NestedField

Method Name: numericalize