c84c714c64f85c01c2b748fd035a19e6e9154648,allennlp/modules/seq2seq_encoders/multi_head_self_attention.py,MultiHeadSelfAttention,forward,#MultiHeadSelfAttention#Any#Any#,78

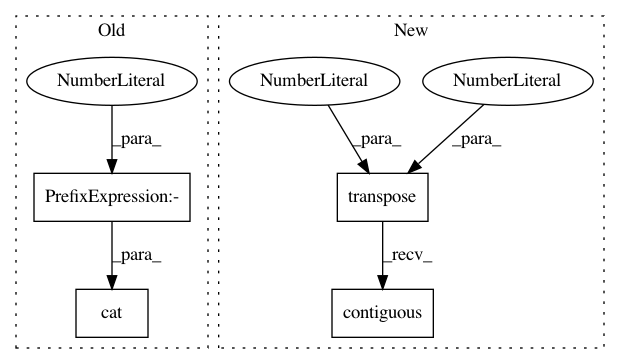

Before Change

# Note that we _cannot_ use a reshape here, because this tensor was created

# with num_heads being the first dimension, so reshaping naively would not

# throw an error, but give an incorrect result.

outputs = torch.cat(torch.split(outputs, batch_size, dim=0), dim=-1)

# Project back to original input size.

# shape (batch_size, timesteps, input_size)

outputs = self._output_projection(outputs)

After Change

# shape (batch_size, num_heads, timesteps, values_dim/num_heads)

outputs = outputs.view(batch_size, num_heads, timesteps, int(self._values_dim / num_heads))

# shape (batch_size, timesteps, num_heads, values_dim/num_heads)

outputs = outputs.transpose(1, 2).contiguous()

# shape (batch_size, timesteps, values_dim)

outputs = outputs.view(batch_size, timesteps, self._values_dim)

# Project back to original input size.

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 4

Instances Project Name: allenai/allennlp

Commit Name: c84c714c64f85c01c2b748fd035a19e6e9154648

Time: 2018-03-05

Author: markn@allenai.org

File Name: allennlp/modules/seq2seq_encoders/multi_head_self_attention.py

Class Name: MultiHeadSelfAttention

Method Name: forward

Project Name: erikwijmans/Pointnet2_PyTorch

Commit Name: 65a127f3d23527711259b6cdcce4e946b876f49c

Time: 2018-02-10

Author: ewijmans2@gmail.com

File Name: models/Pointnet2SemSeg.py

Class Name: Pointnet2SSG

Method Name: forward

Project Name: erikwijmans/Pointnet2_PyTorch

Commit Name: 65a127f3d23527711259b6cdcce4e946b876f49c

Time: 2018-02-10

Author: ewijmans2@gmail.com

File Name: models/Pointnet2SemSeg.py

Class Name: Pointnet2MSG

Method Name: forward
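
The allennlp change recorded above replaces a `torch.split` / `torch.cat` merge of attention heads with a `view` / `transpose` / `view` sequence. The following is a minimal sketch, not the library's code, of why both can yield the same `(batch_size, timesteps, values_dim)` tensor. It assumes the two versions receive differently laid-out inputs: head-major (`num_heads * batch_size` in the first dimension) before the change, as the original comment states, and batch-major (`batch_size * num_heads`) after it, which is inferred from the new `view` call rather than shown in the record. The sizes and the names `per_head`, `head_major`, `batch_major`, and `head_dim` are hypothetical.

```python
import torch

# Hypothetical sizes chosen only for illustration.
batch_size, num_heads, timesteps, head_dim = 2, 3, 4, 5
values_dim = num_heads * head_dim

# Stand-in for per-head attention outputs, with distinct values so that any
# mix-up between heads and batch entries is detectable.
# shape (num_heads, batch_size, timesteps, head_dim)
per_head = torch.arange(
    num_heads * batch_size * timesteps * head_dim, dtype=torch.float32
).reshape(num_heads, batch_size, timesteps, head_dim)

# Reference result: heads concatenated along the feature axis.
# shape (batch_size, timesteps, values_dim)
expected = torch.cat([per_head[h] for h in range(num_heads)], dim=-1)

# "Before" path, assuming a head-major input.
# shape (num_heads * batch_size, timesteps, head_dim)
head_major = per_head.reshape(num_heads * batch_size, timesteps, head_dim)
before = torch.cat(torch.split(head_major, batch_size, dim=0), dim=-1)

# "After" path, assuming a batch-major input.
# shape (batch_size * num_heads, timesteps, head_dim)
batch_major = per_head.transpose(0, 1).reshape(
    batch_size * num_heads, timesteps, head_dim
)
after = batch_major.view(batch_size, num_heads, timesteps, head_dim)
after = after.transpose(1, 2).contiguous()
after = after.view(batch_size, timesteps, values_dim)

# The pitfall named in the original comment: viewing a head-major tensor
# directly raises no error but interleaves heads and batch entries.
naive = head_major.view(batch_size, timesteps, values_dim)

before_matches = torch.equal(before, expected)
after_matches = torch.equal(after, expected)
naive_matches = torch.equal(naive, expected)
print(before_matches, after_matches, naive_matches)
```

Under these assumptions both paths reproduce the reference tensor, while the naive `view` on the head-major layout does not. The `contiguous()` call is needed because `view` cannot be applied to the non-contiguous tensor that `transpose` returns.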