16d2eb3061f1bed8ade390c5e2a2c1de9daa3509,theanolm/optimizers/basicoptimizer.py,BasicOptimizer,__init__,#BasicOptimizer#Any#Any#Any#,16

Before Change

gradients = tensor.grad(cost, wrt=list(self.network.params.values()))

# Normalize the norm of the gradients to given maximum value.

if "max_gradient_norm" in optimization_options:

max_norm = optimization_options["max_gradient_norm"]

epsilon = optimization_options["epsilon"]

squares = [tensor.sqr(gradient) for gradient in gradients]

sums = [tensor.sum(square) for square in squares]

total_sum = sum(sums)  # sum over parameter variables

norm = tensor.sqrt(total_sum)

target_norm = tensor.clip(norm, 0.0, max_norm)

gradients = [gradient * target_norm / (epsilon + norm)

for gradient in gradients]

self._gradient_exprs = gradients

self.gradient_update_function = \

theano.function([self.network.minibatch_input,

self.network.minibatch_mask],

After Change

# Create Theano shared variables from the initial parameter values.

self.params = {name: theano.shared(value, name)

for name, value in self.param_init_values.items() }

# numerical stability / smoothing term to prevent divide-by-zero

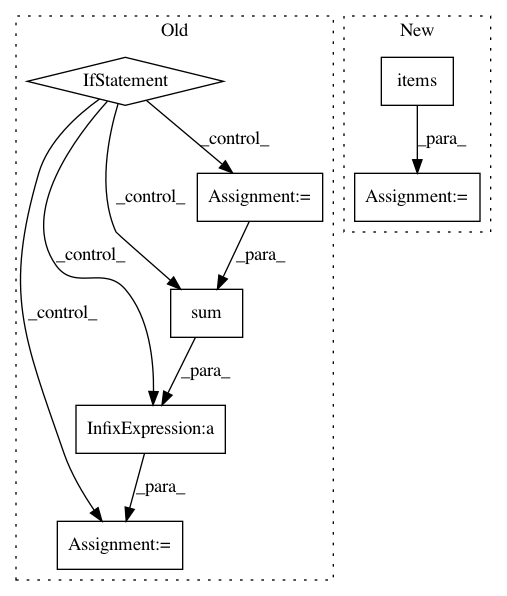

if not "epsilon" in optimization_options:

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 7

Instances

Project Name: senarvi/theanolm

Commit Name: 16d2eb3061f1bed8ade390c5e2a2c1de9daa3509

Time: 2015-12-04

Author: seppo.git@marjaniemi.com

File Name: theanolm/optimizers/basicoptimizer.py

Class Name: BasicOptimizer

Method Name: __init__

Project Name: deepchem/deepchem

Commit Name: f4bc57459e575c7111f50a2744c8054d3d43f0d5

Time: 2020-07-02

Author: bharath@Bharaths-MBP.zyxel.com

File Name: deepchem/hyper/gaussian_process.py

Class Name: GaussianProcessHyperparamOpt

Method Name: hyperparam_search

Project Name: chainer/chainercv

Commit Name: d37d08d9b2a806b3345fba41711c0d517b92a65c

Time: 2017-06-15

Author: yuyuniitani@gmail.com

File Name: chainercv/links/model/vgg/vgg16.py

Class Name: VGG16Layers

Method Name: predict