c2165224d198450a3b4329ae099a772aa65d51c5,fairseq/models/levenshtein_transformer.py,LevenshteinTransformerModel,forward_decoder,#LevenshteinTransformerModel#Any#Any#Any#Any#,318

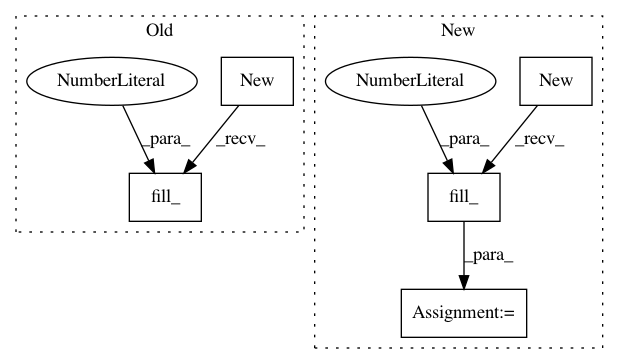

Before Change

attn = decoder_out["attn"]
if max_ratio is None:
    max_lens = output_tokens.new(output_tokens.size(0)).fill_(255)
else:
    max_lens = (
        (~encoder_out["encoder_padding_mask"]).sum(1) * max_ratio
    ).clamp(min=10)

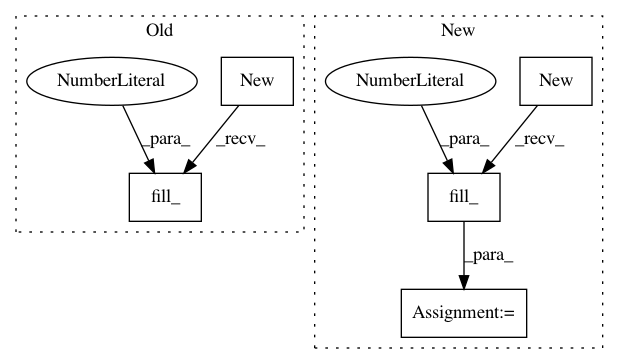

After Change

bsz = output_tokens.size(0)
if max_ratio is None:
    max_lens = output_tokens.new().fill_(255)
else:
    if encoder_out["encoder_padding_mask"] is None:
        max_src_len = encoder_out["encoder_out"].size(1)
        src_lens = encoder_out["encoder_out"].new(bsz).fill_(max_src_len)
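Taken together, the two snippets cap each sentence's decoder output length by its source length, and the change guards against an encoder that returns no padding mask. The sketch below restates that logic in plain Python so it runs without PyTorch: lists stand in for tensors, and `compute_max_lens` and its parameters are hypothetical names. The After Change excerpt ends before the branch that handles a non-None mask, so that branch is an assumption modeled on the Before Change code (counting non-padded positions). The fixed cap of 255 is returned once per sentence, following the Before Change shape.

```python
from typing import List, Optional, Sequence


def compute_max_lens(
    bsz: int,
    max_src_len: int,
    padding_mask: Optional[Sequence[Sequence[bool]]],
    max_ratio: Optional[float],
) -> List[float]:
    """Hypothetical helper: per-sentence upper bound on decoder output length."""
    if max_ratio is None:
        # No ratio given: use a fixed cap of 255 tokens for every sentence.
        return [255] * bsz
    if padding_mask is None:
        # No padding mask (the case the change guards against): assume every
        # source sentence spans the full encoder length.
        src_lens = [max_src_len] * bsz
    else:
        # Assumed branch: True marks padding, so count non-padded positions.
        src_lens = [sum(not is_pad for is_pad in row) for row in padding_mask]
    # Scale by max_ratio and never allow a cap below 10 tokens.
    return [max(n * max_ratio, 10) for n in src_lens]
```

Without the `is None` check, the Before Change code applies `~` directly to the mask, which fails when the encoder supplies `None`; the guard substitutes the full encoder length instead.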

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 5

Instances

Project Name: pytorch/fairseq

Commit Name: c2165224d198450a3b4329ae099a772aa65d51c5

Time: 2019-10-08

Author: changhan@fb.com

File Name: fairseq/models/levenshtein_transformer.py

Class Name: LevenshteinTransformerModel

Method Name: forward_decoder

Project Name: rusty1s/pytorch_geometric

Commit Name: cc87d55ce7787b4a46f36ef0cf53c9a75414487f

Time: 2017-11-24

Author: matthias.fey@tu-dortmund.de

File Name: torch_geometric/nn/functional/spline_conv/edgewise_spline_weighting_gpu.py

Class Name: EdgewiseSplineWeightingGPU

Method Name: backward