4725d18c4d23dfa1c598026a67c0620006a39dd9,rbm/run_rbm.py,,benchmark,#Any#Any#Any#Any#Any#Any#Any#,37

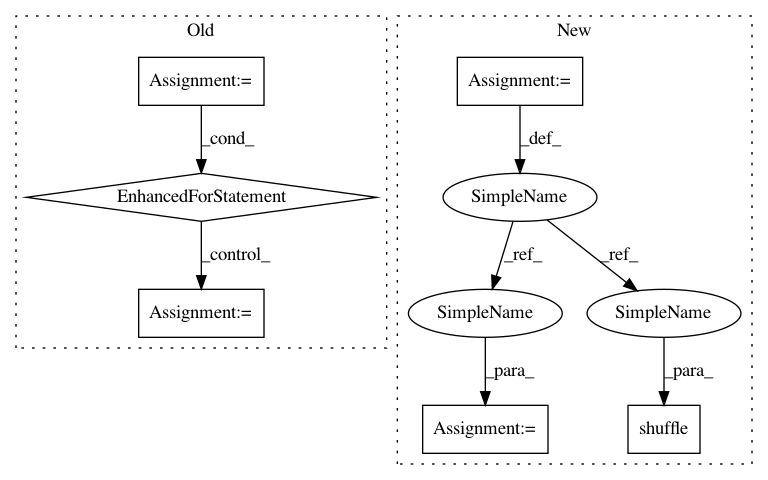

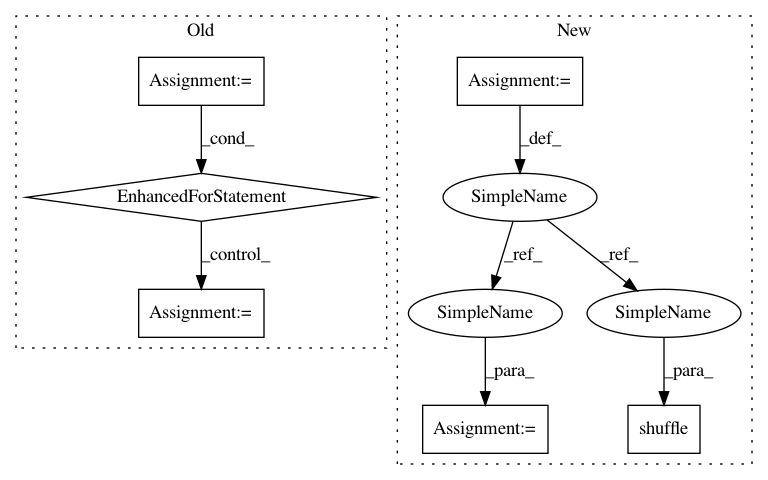

Before Change

```python
    with open(output_file, "a") as f:
        writer = csv.DictWriter(f, ["L", "k", "time"])
        for L in sorted(dataset_sizes):
            for k_ in k:
                print("L = {}; k = {}".format(L, k_))
                train_set = load_train(L)
                num_hidden = (train_set.shape[-1]
                              if num_hidden is None
                              else num_hidden)
                rbm = RBM(num_visible=train_set.shape[-1],
                          num_hidden=num_hidden)
                time_elapsed = -time.perf_counter()
                rbm.train(train_set, epochs,
                          batch_size, k=k_,
                          lr=learning_rate,
                          momentum=momentum,
                          initial_gaussian_noise=0,
                          log_every=0,
                          progbar=False)
                time_elapsed += time.perf_counter()
                writer.writerow({"L": L,
                                 "k": k_,
                                 "time": time_elapsed})


@cli.command("train")
@click.option("--train-path", default="../data/Ising2d_L4.pkl.gz",
              show_default=True, type=click.Path(exists=True),
              help="path to the training data")
```
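The timing idiom in this hunk starts `time_elapsed` at `-time.perf_counter()` and adds the reading taken after `rbm.train(...)`, which is the same as computing `end - start`. The sketch below isolates that idiom together with the `csv.DictWriter` row layout (`L`, `k`, `time`) used for the results. `time_run`, `dummy_workload`, and `write_results` are hypothetical names, the workload is a stand-in for `rbm.train`, and an in-memory buffer replaces `output_file`. The benchmark itself never calls `writer.writeheader()`; the sketch writes a header once into a fresh buffer, which is an assumption about how the results might be read back (with the benchmark's append mode, a real file would need a check so the header is not repeated on every invocation).

```python
import csv
import io
import time


def time_run(fn, *args, **kwargs):
    """Return wall-clock seconds spent in fn(*args, **kwargs).

    Mirrors the benchmark's idiom: start from the negated counter reading
    and add the reading taken after the call, i.e. end - start.
    """
    elapsed = -time.perf_counter()
    fn(*args, **kwargs)
    elapsed += time.perf_counter()
    return elapsed


def dummy_workload(n):
    # Hypothetical stand-in for rbm.train(...): just burns some CPU.
    return sum(i * i for i in range(n))


def write_results(stream, rows):
    # Same field names as the benchmark's DictWriter.
    writer = csv.DictWriter(stream, ["L", "k", "time"])
    writer.writeheader()  # assumption: the original does not write a header
    for row in rows:
        writer.writerow(row)


# Same nested, sorted iteration order as the Before version.
rows = [{"L": L, "k": k_, "time": time_run(dummy_workload, 10_000 * L)}
        for L in sorted([8, 4]) for k_ in [1, 10]]
buf = io.StringIO()
write_results(buf, rows)
print(buf.getvalue())
```

The `csv` module documentation recommends opening output files with `newline=''`; the benchmark's `open(output_file, "a")` omits it, which can produce blank rows between records on Windows.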

After Change

```python
            for f in listdir("/home/data/critical-2d-ising/")
            if isdir(join("/home/data/critical-2d-ising/", f))]
    runs = list(product(dataset_sizes, k))
    shuffle(runs)
    with open(output_file, "a") as f:
        writer = csv.DictWriter(f, ["L", "k", "time"])
        for L, k_ in runs:
            print("L = {}; k = {}".format(L, k_))
            train_set = load_train(L)
            num_hidden = (train_set.shape[-1]
                          if num_hidden is None
                          else num_hidden)
            rbm = RBM(num_visible=train_set.shape[-1],
                      num_hidden=num_hidden)
            time_elapsed = -time.perf_counter()
            rbm.train(train_set, epochs,
                      batch_size, k=k_,
                      lr=learning_rate,
                      momentum=momentum,
                      initial_gaussian_noise=0,
                      log_every=0,
                      progbar=False)
            time_elapsed += time.perf_counter()
            torch.cuda.empty_cache()
            writer.writerow({"L": L,
                             "k": k_,
                             "time": time_elapsed})


@cli.command("train")
@click.option("--train-path", default="../data/Ising2d_L4.pkl.gz",
              show_default=True, type=click.Path(exists=True),
              help="path to the training data")
```
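The After version replaces the nested, sorted loops with a single list of `(L, k)` pairs built by `product` (presumably `itertools.product`) and then shuffled. A plausible motivation, not stated in the commit, is to keep slow drifts over a long benchmark, such as GPU warm-up or growing memory use, from lining up with a particular `L` or `k`; the added `torch.cuda.empty_cache()` call similarly releases unused memory held by PyTorch's caching allocator between runs. The sketch below shows only the ordering logic with the standard library. `plan_runs` and its `seed` parameter are hypothetical, and seeding a private `random.Random` is an assumption added so that a given order can be reproduced; the original calls `shuffle(runs)` without a seed.

```python
import random
from itertools import product


def plan_runs(dataset_sizes, ks, seed=None):
    """Return every (L, k) pair exactly once, in random order.

    Covers the same pairs as the nested `for L ... for k_ ...` loops of
    the Before version; only the execution order differs.
    """
    runs = list(product(dataset_sizes, ks))
    rng = random.Random(seed)  # private RNG; `seed` is a hypothetical addition
    rng.shuffle(runs)
    return runs


runs = plan_runs([4, 8, 16], [1, 10], seed=0)
for L, k_ in runs:
    print("L = {}; k = {}".format(L, k_))
```

One thing worth checking in both versions: `num_hidden` is overwritten inside the loop. If it starts as `None`, the first run sets it to that dataset's `train_set.shape[-1]`, and every later run reuses that value instead of its own input width. Once the order is shuffled, which width gets reused can change between invocations. Assigning the result to a separate local name (for example a hypothetical `n_hidden`) and passing that to `RBM` would keep each run independent.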

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 6

Instances

Project Name: PIQuIL/QuCumber

Commit Name: 4725d18c4d23dfa1c598026a67c0620006a39dd9

Time: 2018-06-18

Author: emerali@users.noreply.github.com

File Name: rbm/run_rbm.py

Class Name:

Method Name: benchmark

Project Name: PIQuIL/QuCumber

Commit Name: 04c39a1f03c6984148b3a3ad0f81e5a50ef7cbaf

Time: 2018-06-11

Author: emerali@users.noreply.github.com

File Name: rbm/run_rbm.py

Class Name:

Method Name: benchmark

Project Name: danforthcenter/plantcv

Commit Name: 96c26bd09d02bb9cddbc083c75ba2ea65b5d377a

Time: 2020-07-16

Author: noahfahlgren@gmail.com

File Name: plantcv/plantcv/color_palette.py

Class Name:

Method Name: color_palette