52d69d629e9083fdd4e5ff14e2c52d727bfd9335,nala/preprocessing/tokenizers.py,NLTKTokenizer,tokenize,#NLTKTokenizer#Any#,26

Before Change

:type dataset: nala.structures.data.Dataset

for part in dataset.parts():
    part.sentences = [[Token(word) for word in word_tokenize(sentence)] for sentence in part.sentences]

class TmVarTokenizer(Tokenizer):

After Change

for token_word in word_tokenize(sentence):
    token_start = part.text.find(token_word, so_far)
    so_far = token_start + len(token_word)
    part.sentences[index].append(Token(token_word, token_start))

class TmVarTokenizer(Tokenizer):
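The After Change version records each token's character offset in the part's text. It searches with `str.find` from a running cursor (`so_far`), so a repeated word maps to its own occurrence rather than the first one in the text.

The sketch below shows that idea in minimal Python. It makes a few assumptions:

- It uses a hypothetical `Token` dataclass with `word` and `start` fields, not nala's actual class.
- It uses a hypothetical helper `tokenize_with_offsets`.
- It swaps in a small regex tokenizer for `nltk.word_tokenize`, so it runs without NLTK.

```python
import re
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Token:
    """Hypothetical stand-in for nala's Token: the surface word plus its character offset."""
    word: str
    start: int


def simple_tokenize(sentence: str) -> List[str]:
    """Assumed stand-in for nltk.word_tokenize: runs of word characters, or single punctuation marks."""
    return re.findall(r"\w+|[^\w\s]", sentence)


def tokenize_with_offsets(text: str, sentences: List[str],
                          tokenize: Callable[[str], List[str]] = simple_tokenize) -> List[List[Token]]:
    """Hypothetical helper: tokenize each sentence and locate every token inside `text`."""
    so_far = 0  # running cursor: each token is searched for at or after this index
    result: List[List[Token]] = []
    for sentence in sentences:
        tokens: List[Token] = []
        for token_word in tokenize(sentence):
            token_start = text.find(token_word, so_far)
            if token_start == -1:
                # The tokenizer changed the surface form (NLTK, for instance,
                # rewrites double quotes as `` and ''), so no match exists.
                raise ValueError(f"token {token_word!r} not found in text after offset {so_far}")
            so_far = token_start + len(token_word)  # advance past this token
            tokens.append(Token(token_word, token_start))
        result.append(tokens)
    return result


text = "BRCA1 is mutated. It is common."
result = tokenize_with_offsets(text, ["BRCA1 is mutated.", "It is common."])
print(result[1][1])  # Token(word='is', start=21)
```

Without the cursor, `text.find("is")` for the second sentence would return 6, the offset of the first "is". The running offset avoids exactly that mistake.

The `-1` check is a defensive addition that the original snippet does not show. It matters whenever the tokenizer emits strings that do not occur verbatim in the source text.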

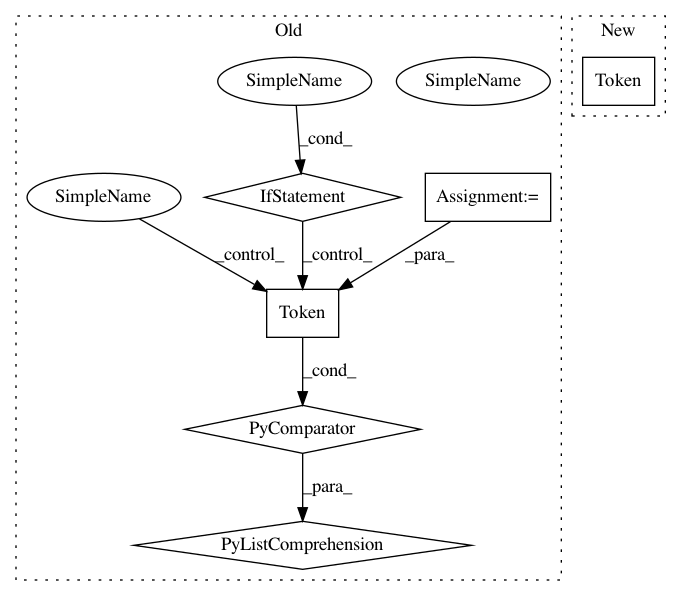

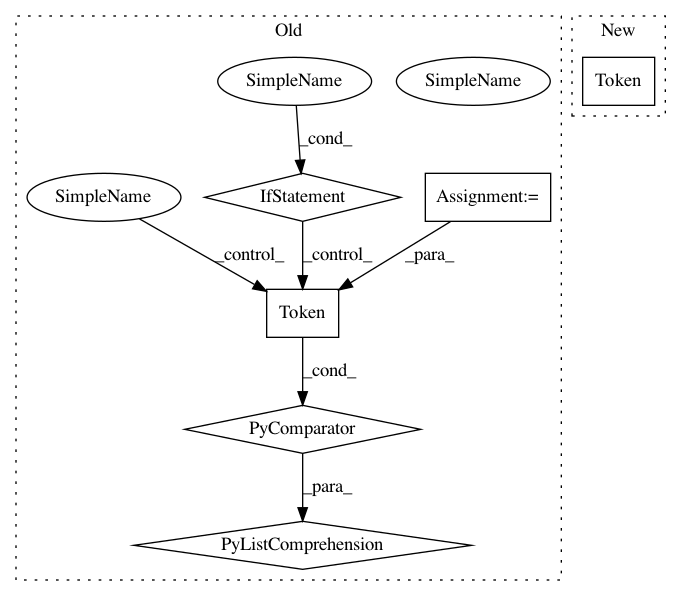

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 6

Instances

Project Name: Rostlab/nalaf

Commit Name: 52d69d629e9083fdd4e5ff14e2c52d727bfd9335

Time: 2015-08-28

Author: aleksandar.bojchevski@gmail.com

File Name: nala/preprocessing/tokenizers.py

Class Name: NLTKTokenizer

Method Name: tokenize

Project Name: allenai/allennlp

Commit Name: f4eef6eeb5e11cc0464d01dd812b6a869405e8d0

Time: 2018-07-17

Author: joelgrus@gmail.com

File Name: allennlp/tests/data/token_indexers/elmo_indexer_test.py

Class Name: TestELMoTokenCharactersIndexer

Method Name: test_elmo_as_array_produces_token_sequence

Project Name: Rostlab/nalaf

Commit Name: 52d69d629e9083fdd4e5ff14e2c52d727bfd9335

Time: 2015-08-28

Author: aleksandar.bojchevski@gmail.com

File Name: nala/preprocessing/tokenizers.py

Class Name: TmVarTokenizer

Method Name: tokenize
