2c4a6e537126f4123de7c97f30587310d3712c06,tests/data/tokenizers/character_tokenizer_test.py,TestCharacterTokenizer,test_splits_into_characters,#TestCharacterTokenizer#,7

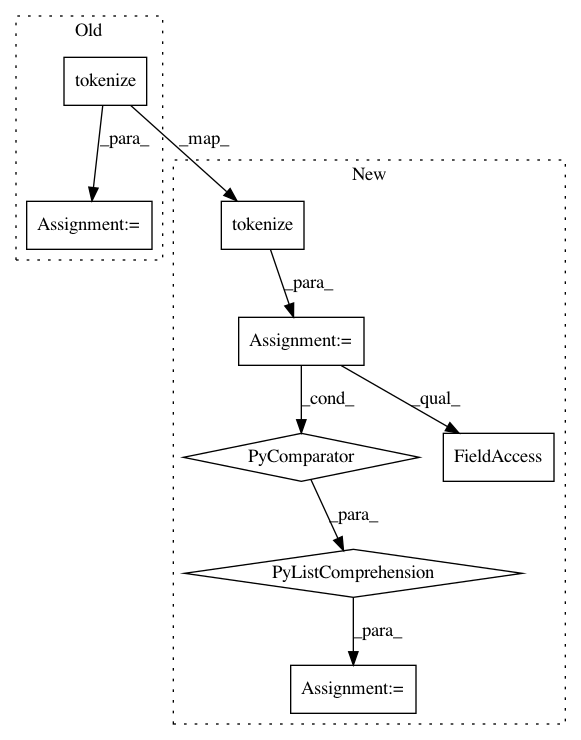

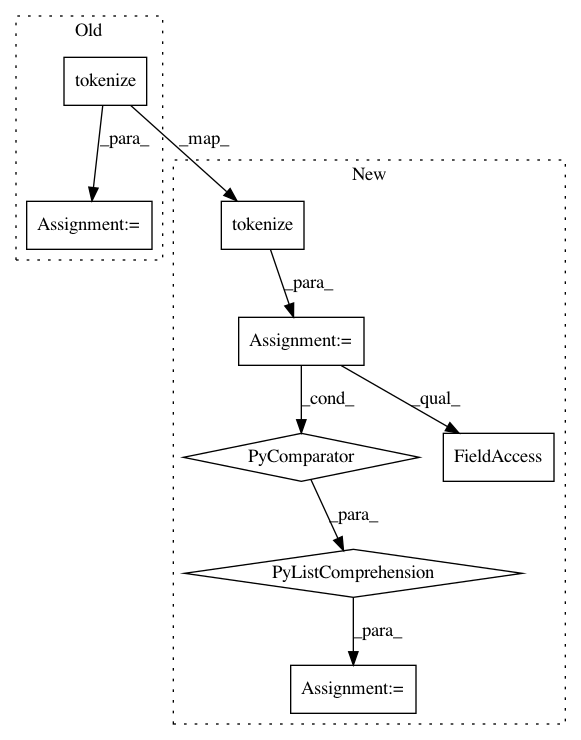

Before Change

    def test_splits_into_characters(self):
        tokenizer = CharacterTokenizer(start_tokens=["<S1>", "<S2>"], end_tokens=["</S2>", "</S1>"])
        sentence = "A, small sentence."
        tokens, _ = tokenizer.tokenize(sentence)
        expected_tokens = ["<S1>", "<S2>", "A", ",", " ", "s", "m", "a", "l", "l", " ", "s", "e",
                           "n", "t", "e", "n", "c", "e", ".", "</S2>", "</S1>"]
        assert tokens == expected_tokens

After Change

    def test_splits_into_characters(self):
        tokenizer = CharacterTokenizer(start_tokens=["<S1>", "<S2>"], end_tokens=["</S2>", "</S1>"])
        sentence = "A, small sentence."
        tokens = [t.text for t in tokenizer.tokenize(sentence)]
        expected_tokens = ["<S1>", "<S2>", "A", ",", " ", "s", "m", "a", "l", "l", " ", "s", "e",
                           "n", "t", "e", "n", "c", "e", ".", "</S2>", "</S1>"]
        assert tokens == expected_tokens
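
The change above suggests that `tokenize` stopped returning a pair (token strings plus a second value the old test discarded) and now returns objects that expose a `text` attribute. A minimal, self-contained sketch of the new calling pattern follows. The `Token` and `SketchCharacterTokenizer` classes here are hypothetical stand-ins written for illustration, not the allennlp implementations, and the way start and end tokens are attached is an assumption chosen to match the expected output in the test.

```python
from typing import List, NamedTuple, Optional


class Token(NamedTuple):
    """Hypothetical stand-in for a token object exposing a `text` attribute."""
    text: str


class SketchCharacterTokenizer:
    """Illustrative only; NOT the allennlp CharacterTokenizer."""

    def __init__(self,
                 start_tokens: Optional[List[str]] = None,
                 end_tokens: Optional[List[str]] = None) -> None:
        self._start_tokens = list(start_tokens or [])
        self._end_tokens = list(end_tokens or [])

    def tokenize(self, text: str) -> List[Token]:
        # Assumption: start tokens are prepended and end tokens appended as given.
        pieces = self._start_tokens + list(text) + self._end_tokens
        return [Token(piece) for piece in pieces]


tokenizer = SketchCharacterTokenizer(start_tokens=["<S1>", "<S2>"],
                                     end_tokens=["</S2>", "</S1>"])
sentence = "A, small sentence."

# New pattern: unwrap the token objects instead of unpacking a tuple.
tokens = [t.text for t in tokenizer.tokenize(sentence)]
expected_tokens = ["<S1>", "<S2>"] + list(sentence) + ["</S2>", "</S1>"]
assert tokens == expected_tokens
```

Comparing on `t.text` keeps the expected value a plain list of strings, so the assertion does not depend on any other fields the token objects may carry.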

In pattern: SUPERPATTERN

Frequency: 4

Non-data size: 8

Instances

Project Name: allenai/allennlp

Commit Name: 2c4a6e537126f4123de7c97f30587310d3712c06

Time: 2017-09-13

Author: mattg@allenai.org

File Name: tests/data/tokenizers/character_tokenizer_test.py

Class Name: TestCharacterTokenizer

Method Name: test_splits_into_characters

Project Name: allenai/allennlp

Commit Name: 2c4a6e537126f4123de7c97f30587310d3712c06

Time: 2017-09-13

Author: mattg@allenai.org

File Name: tests/data/tokenizers/word_tokenizer_test.py

Class Name: TestWordTokenizer

Method Name: test_stems_and_filters_correctly

Project Name: allenai/allennlp

Commit Name: 2c4a6e537126f4123de7c97f30587310d3712c06

Time: 2017-09-13

Author: mattg@allenai.org

File Name: tests/data/tokenizers/word_tokenizer_test.py

Class Name: TestWordTokenizer

Method Name: test_passes_through_correctly

Project Name: allenai/allennlp

Commit Name: 2c4a6e537126f4123de7c97f30587310d3712c06

Time: 2017-09-13

Author: mattg@allenai.org

File Name: tests/data/tokenizers/character_tokenizer_test.py

Class Name: TestCharacterTokenizer

Method Name: test_handles_byte_encoding