52d69d629e9083fdd4e5ff14e2c52d727bfd9335,nala/preprocessing/tokenizers.py,NLTKTokenizer,tokenize,#NLTKTokenizer#Any#,26

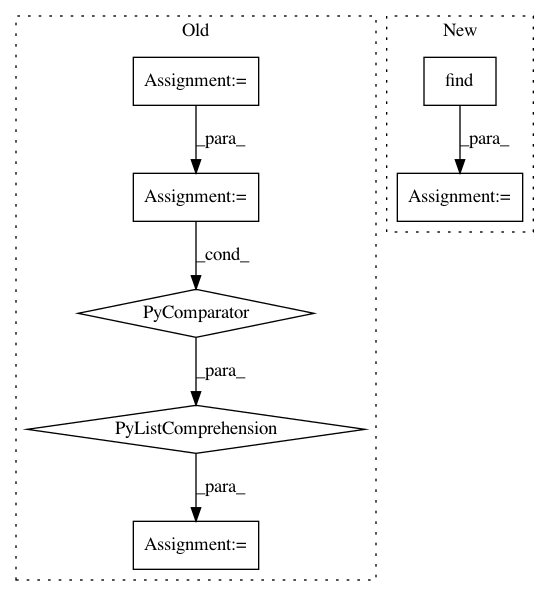

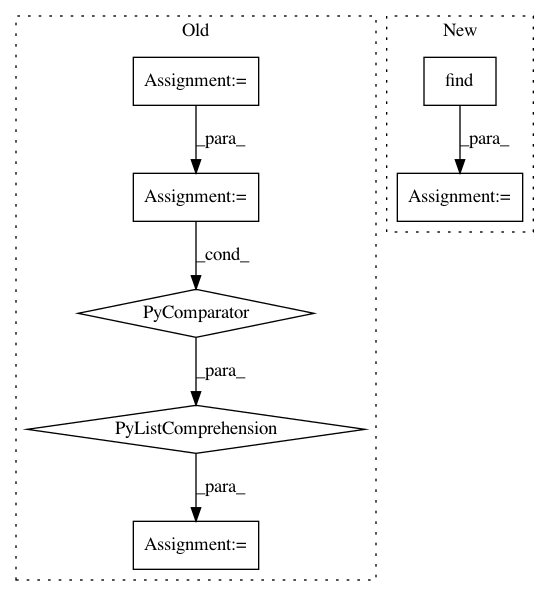

Before Change

:type dataset: nala.structures.data.Dataset

for part in dataset.parts():

part.sentences = [[Token(word) for word in word_tokenize(sentence)] for sentence in part.sentences]

class TmVarTokenizer(Tokenizer):

After Change

for index, sentence in enumerate(part.sentences):

part.sentences[index] = []

for token_word in word_tokenize(sentence):

token_start = part.text.find(token_word, so_far)

so_far = token_start + len(token_word)

part.sentences[index].append(Token(token_word, token_start))

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 7

Instances

Project Name: Rostlab/nalaf

Commit Name: 52d69d629e9083fdd4e5ff14e2c52d727bfd9335

Time: 2015-08-28

Author: aleksandar.bojchevski@gmail.com

File Name: nala/preprocessing/tokenizers.py

Class Name: NLTKTokenizer

Method Name: tokenize

Project Name: nltk/nltk

Commit Name: 0bcc8da0344cddc9dfff82a788df519c19489500

Time: 2017-10-17

Author: lyyb46@gmail.com

File Name: nltk/tokenize/treebank.py

Class Name: TreebankWordTokenizer

Method Name: span_tokenize

Project Name: Rostlab/nalaf

Commit Name: 52d69d629e9083fdd4e5ff14e2c52d727bfd9335

Time: 2015-08-28

Author: aleksandar.bojchevski@gmail.com

File Name: nala/preprocessing/tokenizers.py

Class Name: NLTKTokenizer

Method Name: tokenize

Project Name: aertslab/pySCENIC

Commit Name: 6d6a32dd677aa6097c4e77b359f81989c3e949af

Time: 2018-04-05

Author: vandesande.bram@gmail.com

File Name: src/pyscenic/rnkdb.py

Class Name:

Method Name: build_rankings