d47067937abacfe87f2963adca8daeada3c631fe,fairseq/optim/fp16_optimizer.py,_FP16OptimizerMixin,_sync_fp16_grads_to_fp32,#_FP16OptimizerMixin#,79

Before Change

if self.has_flat_params:
    offset = 0
    for p in self.fp16_params:
        if not p.requires_grad:
            continue
        grad_data = p.grad.data if p.grad is not None else p.data.new_zeros(p.data.shape)
        numel = grad_data.numel()
        self.fp32_params.grad.data[offset:offset+numel].copy_(grad_data.view(-1))
        offset += numel

After Change

if self._needs_sync:

// copy FP16 grads to FP32

if self.has_flat_params:

devices = list(self.fp32_params.keys())

device_params_dict = defaultdict(list)

for p in self.fp16_params:

if p.requires_grad:

device_params_dict[p.device.index].append(p)

for device in devices:

device_params = device_params_dict[device]

offset = 0

for p in device_params:

grad_data = p.grad.data if p.grad is not None else p.data.new_zeros(p.data.shape)

numel = grad_data.numel()

self.fp32_params[device].grad.data[offset:offset+numel].copy_(grad_data.view(-1))

offset += numel

else:

for p, p32 in zip(self.fp16_params, self.fp32_params):

if not p.requires_grad:

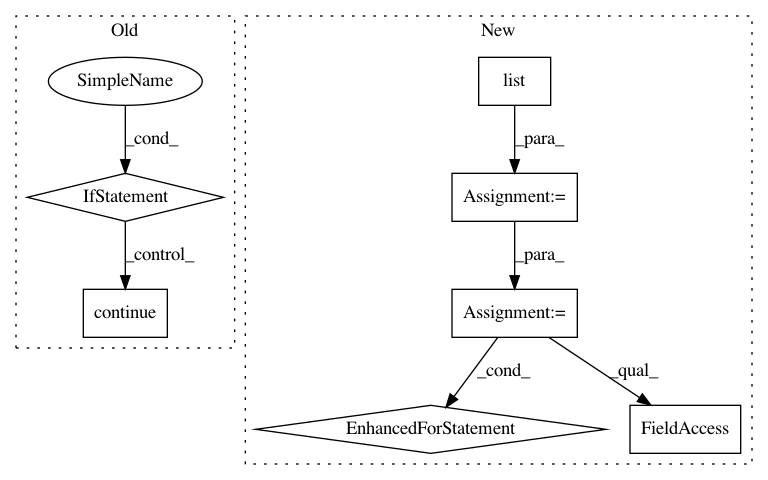

continueIn pattern: SUPERPATTERN

Frequency: 3

Non-data size: 7

Instances

Project Name: pytorch/fairseq

Commit Name: d47067937abacfe87f2963adca8daeada3c631fe

Time: 2020-09-10

Author: bhosale.shruti18@gmail.com

File Name: fairseq/optim/fp16_optimizer.py

Class Name: _FP16OptimizerMixin

Method Name: _sync_fp16_grads_to_fp32

Project Name: pytorch/fairseq

Commit Name: d47067937abacfe87f2963adca8daeada3c631fe

Time: 2020-09-10

Author: bhosale.shruti18@gmail.com

File Name: fairseq/optim/fp16_optimizer.py

Class Name: _FP16OptimizerMixin

Method Name: _sync_fp32_params_to_fp16

Project Name: mil-tokyo/webdnn

Commit Name: 2571186c26968de784585bdabf0c0979e9608a85

Time: 2017-04-20

Author: y.kikura@gmail.com

File Name: src/graph_builder/optimizer/util.py

Class Name:

Method Name: listup_operator_in_order
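
Illustration

The After Change snippet groups the trainable FP16 parameters by device and copies each gradient into that device's flat FP32 buffer at a running offset. The sketch below models only that bookkeeping in plain Python, with lists standing in for tensors so it runs without PyTorch. `FakeParam`, `sync_grads_to_flat`, and `flat_grads` are hypothetical names introduced here for illustration and are not part of fairseq.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class FakeParam:
    """Hypothetical stand-in for an FP16 parameter (not a fairseq or PyTorch type)."""
    data: List[float]
    grad: Optional[List[float]]
    device_index: int
    requires_grad: bool = True


def sync_grads_to_flat(params: List[FakeParam],
                       flat_grads: Dict[int, List[float]]) -> None:
    """Copy each parameter's gradient into the flat buffer of its device."""
    # Group trainable parameters by device, preserving their original order.
    by_device = defaultdict(list)
    for p in params:
        if p.requires_grad:
            by_device[p.device_index].append(p)

    for device, buf in flat_grads.items():
        offset = 0  # the offset restarts for every device buffer
        for p in by_device[device]:
            # A missing gradient is treated as all zeros, as in the snippet.
            grad = p.grad if p.grad is not None else [0.0] * len(p.data)
            numel = len(grad)
            buf[offset:offset + numel] = [float(g) for g in grad]
            offset += numel


params = [
    FakeParam(data=[1.0, 2.0], grad=[0.5, 0.25], device_index=0),
    FakeParam(data=[3.0], grad=None, device_index=1),
    FakeParam(data=[4.0], grad=[9.0], device_index=0, requires_grad=False),
    FakeParam(data=[5.0, 6.0], grad=[1.5, 2.5], device_index=1),
]
# One flat buffer per device, sized to the trainable parameters on it.
flat_grads = {0: [0.0] * 2, 1: [0.0] * 3}
sync_grads_to_flat(params, flat_grads)
print(flat_grads)  # {0: [0.5, 0.25], 1: [0.0, 1.5, 2.5]}
```

Resetting `offset` inside the device loop is the point of the change: with a single flat buffer one running offset suffices, but with one buffer per device each buffer needs its own.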