4296a765125fff6491892a1bb70fb32ac516dae6,ch15/01_train_a2c.py,,,#,54

Before Change

adv_v = vals_ref_v.unsqueeze(dim=-1) - value_v.detach()

log_prob_v = adv_v * calc_logprob(mu_v, var_v, actions_v)

loss_policy_v = -log_prob_v.mean()

entropy_loss_v = ENTROPY_BETA * (-(torch.log(2*math.pi*var_v) + 1)/2).mean()

loss_v = loss_policy_v + entropy_loss_v + loss_value_v

loss_v.backward()

optimizer.step()

tb_tracker.track("advantage", adv_v, step_idx)
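The Before Change snippet calls `calc_logprob`, whose definition is not part of this record, and builds an entropy term from `var_v`. The sketch below assumes a Gaussian policy with mean `mu` and variance `var` and shows the scalar formulas those two lines appear to rely on. The function names `gaussian_logprob` and `gaussian_entropy` are hypothetical, and this is plain Python on floats rather than the tensor code from the commit.

```python
import math


def gaussian_logprob(mu, var, action):
    """Log-density of N(mu, var) evaluated at `action` (scalar case).

    Assumed stand-in for what calc_logprob computes per action dimension.
    """
    return -((action - mu) ** 2) / (2.0 * var) - 0.5 * math.log(2.0 * math.pi * var)


def gaussian_entropy(var):
    """Differential entropy of N(mu, var): (ln(2*pi*var) + 1) / 2."""
    return 0.5 * (math.log(2.0 * math.pi * var) + 1.0)


# The entropy term in the snippet is the negated entropy scaled by a
# coefficient, so minimizing the total loss pushes the entropy up.
entropy_beta = 1e-4  # illustrative value only; not taken from the commit
entropy_loss = entropy_beta * -gaussian_entropy(1.0)
```

Under this reading, `(-(torch.log(2*math.pi*var_v) + 1)/2).mean()` is the batch mean of the negative entropy, which is why it is added to the loss rather than subtracted.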

After Change

test_env = gym.make(ENV_ID)

net_act = model.ModelActor(envs[0].observation_space.shape[0], envs[0].action_space.shape[0])

net_crt = model.ModelCritic(envs[0].observation_space.shape[0])

if args.cuda:

    net_act.cuda()

    net_crt.cuda()

print(net_act)

print(net_crt)

writer = SummaryWriter(comment="-a2c_" + args.name)

agent = model.AgentA2C(net_act, cuda=args.cuda)

exp_source = ptan.experience.ExperienceSourceFirstLast(envs, agent, GAMMA, steps_count=REWARD_STEPS)

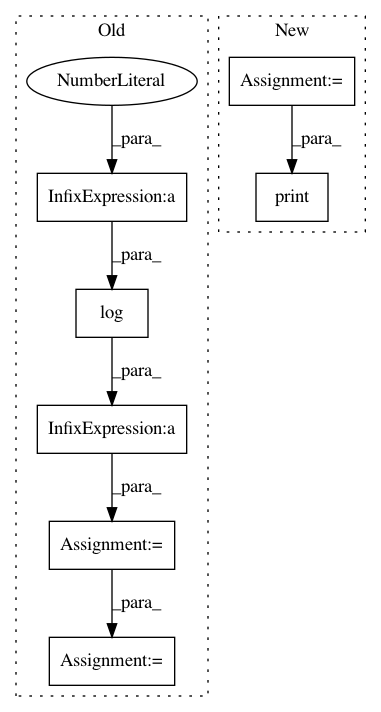

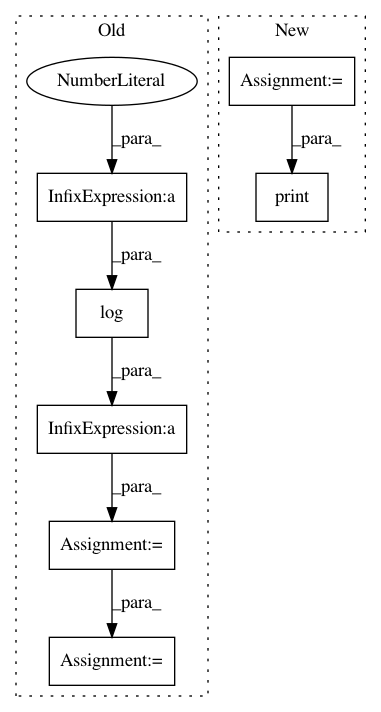

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 7

Instances

Project Name: PacktPublishing/Deep-Reinforcement-Learning-Hands-On

Commit Name: 4296a765125fff6491892a1bb70fb32ac516dae6

Time: 2018-02-10

Author: max.lapan@gmail.com

File Name: ch15/01_train_a2c.py

Class Name:

Method Name:

Project Name: commonsense/conceptnet5

Commit Name: c7c81cdf7b8648f7f5347a7940c846b3042a098e

Time: 2014-02-24

Author: rob@luminoso.com

File Name: conceptnet5/builders/combine_assertions.py

Class Name:

Method Name: output_assertion

Project Name: PacktPublishing/Deep-Reinforcement-Learning-Hands-On

Commit Name: 99abcc6e9b57f441999ce10dbc31ca1bed79c356

Time: 2018-02-10

Author: max.lapan@gmail.com

File Name: ch15/04_train_ppo.py

Class Name:

Method Name: