145170ca9bbd89aa01d8a40841e3c039d3683af8,stellargraph/layer/graph_attention.py,GraphAttention,call,#GraphAttention#Any#,211

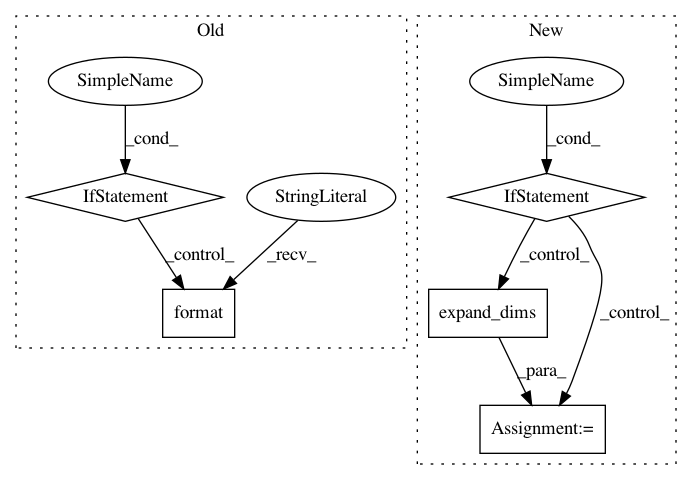

Before Change

    # For the GAT model to match that in the paper, we need to ensure that the graph has self-loops,
    # since the neighbourhood of node i in eq. (4) includes node i itself.
    # Adding self-loops to A via setting the diagonal elements of A to 1.0:
    if kwargs.get("add_self_loops", False):
        # get the number of nodes from inputs[1] directly
        N = K.int_shape(inputs[1])[-1]
        if N is not None:
            # create self-loops
            A = tf.linalg.set_diag(A, K.cast(np.ones((N,)), dtype="float"))
        else:
            raise ValueError(
                "{}: need to know number of nodes to add self-loops; obtained None instead".format(
                    type(self).__name__
                )
            )

    outputs = []
    for head in range(self.attn_heads):
        kernel = self.kernels[head]  # W in the paper (F x F')
        attention_kernel = self.attn_kernels[
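
The self-loop step in the snippet above sets the diagonal of the adjacency matrix A to 1.0 so that each node counts as its own neighbour. Here is a minimal NumPy sketch of the same idea, assuming a dense square adjacency matrix. The helper name `add_self_loops` and the example matrix are hypothetical and not taken from the stellargraph code, which uses `tf.linalg.set_diag` on a tensor instead.

```python
import numpy as np


def add_self_loops(adjacency: np.ndarray) -> np.ndarray:
    """Return a float copy of a square adjacency matrix with its diagonal set to 1.0.

    Hypothetical helper for illustration only; not part of stellargraph.
    """
    if adjacency.ndim != 2 or adjacency.shape[0] != adjacency.shape[1]:
        raise ValueError("expected a square (N x N) adjacency matrix")
    with_loops = adjacency.astype(float, copy=True)  # leave the caller's matrix untouched
    np.fill_diagonal(with_loops, 1.0)  # node i becomes a neighbour of itself
    return with_loops


# A 3-node path graph 0 - 1 - 2 with no self-loops (illustrative data).
A = np.array([[0, 1, 0],
              [1, 0, 1],
              [0, 1, 0]])
A_loops = add_self_loops(A)
```

Working on a copy keeps the original adjacency matrix available, which may matter if other layers expect the graph without self-loops.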

After Change

        # Add batch dimension back if we removed it
        print("BATCH DIM:", batch_dim)
        if batch_dim == 1:
            output = K.expand_dims(output, 0)
        return output

    class GAT:
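
The After Change snippet restores a leading batch dimension of size 1 before returning. The code that removes that dimension is not shown here, so the sketch below assumes it is squeezed away whenever `batch_dim == 1`. It uses NumPy rather than the Keras backend, and `apply_without_batch` and `layer_fn` are hypothetical names introduced only for illustration.

```python
import numpy as np


def apply_without_batch(inputs: np.ndarray, layer_fn):
    """Run layer_fn on inputs, dropping and restoring a leading batch axis of size 1.

    Assumption: the batch axis is removed only when its size is exactly 1.
    """
    batch_dim = inputs.shape[0]
    x = np.squeeze(inputs, axis=0) if batch_dim == 1 else inputs
    output = layer_fn(x)
    # Add the batch dimension back if we removed it
    if batch_dim == 1:
        output = np.expand_dims(output, 0)
    return output


# One "batch" holding 4 nodes with 3 features each (illustrative data).
features = np.arange(12.0).reshape(1, 4, 3)
result = apply_without_batch(features, lambda x: x * 2.0)
```

In this sketch the caller sees the same rank going in and coming out, while `layer_fn` only ever handles the unbatched array when the batch size is 1.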

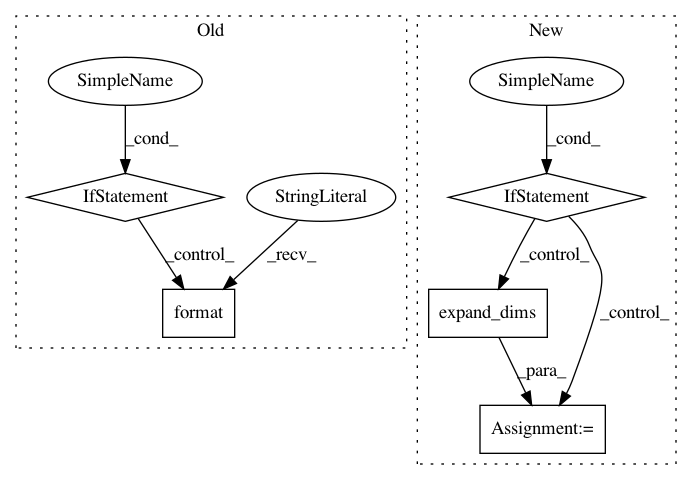

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 5

Instances

Project Name: stellargraph/stellargraph

Commit Name: 145170ca9bbd89aa01d8a40841e3c039d3683af8

Time: 2019-06-03

Author: andrew.docherty@data61.csiro.au

File Name: stellargraph/layer/graph_attention.py

Class Name: GraphAttention

Method Name: call

Project Name: keras-team/keras

Commit Name: 9bc2e60fd587389701c077f5bbff69250d6fb0b1

Time: 2016-09-07

Author: kuza55@gmail.com

File Name: keras/callbacks.py

Class Name: TensorBoard

Method Name: _set_model

Project Name: sony/nnabla

Commit Name: a6f63f9d910d93d5cf3a2d016a0ed2b925f9eb9f

Time: 2018-11-19

Author: Akio.Hayakawa@sony.com

File Name: python/src/nnabla/utils/image_utils/cv2_utils.py

Class Name:

Method Name: imresize