161ae41bebc73c146627169f761e3c4ddf83e5d4,pixyz/losses/losses.py,Divergence,__init__,#Divergence#Any#Any#Any#,237

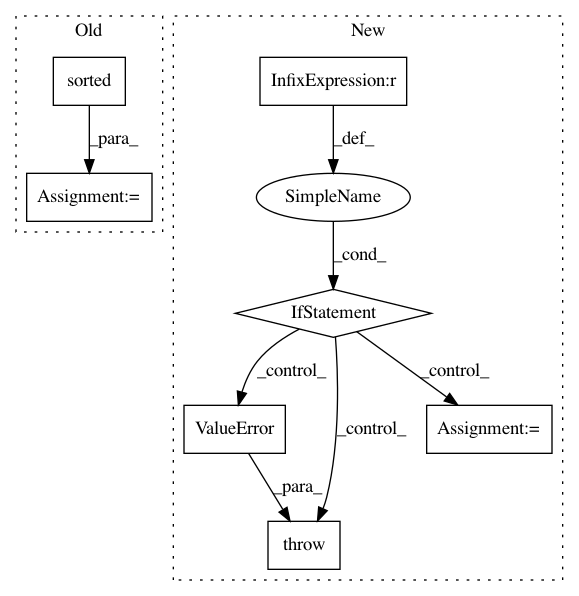

Before Change

_input_var = deepcopy(p.input_var)
if q is not None:
    _input_var += deepcopy(q.input_var)
    _input_var = sorted(set(_input_var), key=_input_var.index)
super().__init__(_input_var)
self.p = p
self.q = q
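
The de-duplication step in the snippet above can be looked at in isolation. The following is a minimal sketch, assuming two plain lists of variable names stand in for `p.input_var` and `q.input_var`; the names `p_input_var`, `q_input_var`, `merged`, `unique_sorted`, and `unique_dict` are hypothetical and not part of pixyz. It shows that `sorted(set(...), key=....index)` removes duplicates while keeping first-seen order, and compares it with a `dict.fromkeys` variant that should give the same result.

```python
# Hypothetical stand-ins for p.input_var and q.input_var (not pixyz objects).
p_input_var = ["x", "z"]
q_input_var = ["z", "y", "x"]

# Concatenate the two lists, as the snippet does with +=.
merged = list(p_input_var) + list(q_input_var)

# set() drops duplicates but loses order; sorting by the index of the
# first occurrence in `merged` restores first-seen order.
unique_sorted = sorted(set(merged), key=merged.index)

# Alternative idiom: dicts preserve insertion order (Python 3.7+),
# so dict.fromkeys de-duplicates in a single pass.
unique_dict = list(dict.fromkeys(merged))

print(unique_sorted)
print(unique_dict)
```

Both forms keep each name once, in order of first appearance. The `sorted`/`index` form calls `list.index` once per unique element, which is fine for the short variable lists involved here.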

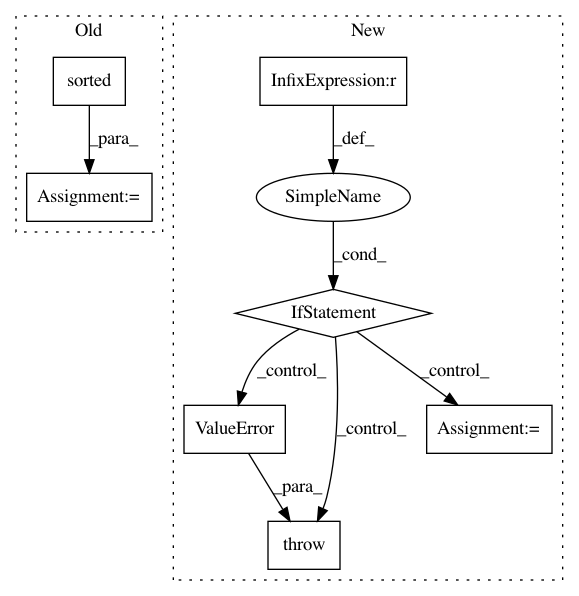

After Change

>>> p = Generator()
>>> q = Inference()
>>> prior = Normal(loc=torch.tensor(0.), scale=torch.tensor(1.),
...                var=["z"], features_shape=[64], name="p_{prior}")
...
>>> # Define a loss function (VAE)
>>> reconst = -p.log_prob().expectation(q)
>>> kl = KullbackLeibler(q, prior)
>>> loss_cls = (reconst - kl).mean()
>>> print(loss_cls)
mean \left(- D_{KL} \left[q(z|x)||p_{prior}(z) \right] - \mathbb{E}_{q(z|x)} \left[\log p(x|z) \right] \right)
>>> # Evaluate this loss function
>>> data = torch.randn(1, 128)  # Pseudo data
>>> loss = loss_cls.eval({"x": data})

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 7

Instances

Project Name: masa-su/pixyz
Commit Name: 161ae41bebc73c146627169f761e3c4ddf83e5d4
Time: 2020-10-26
Author: kaneko@weblab.t.u-tokyo.ac.jp
File Name: pixyz/losses/losses.py
Class Name: Divergence
Method Name: __init__

Project Name: pfnet-research/chainer-chemistry
Commit Name: 30376a1d866a81289a50d973f9e6871b14cf5b8c
Time: 2018-07-02
Author: mottodora@gmail.com
File Name: chainer_chemistry/dataset/splitters/scaffold_splitter.py
Class Name: ScaffoldSplitter
Method Name: _split

Project Name: tensorflow/transform
Commit Name: fdb21ea28fc513f118e1a8b06069f84c3f4c23bc
Time: 2019-09-19
Author: zoy@google.com
File Name: tensorflow_transform/beam/analyzer_cache.py
Class Name: WriteAnalysisCacheToFS
Method Name: expand