554a2bff60820efb49ae777b02bf4bd9a71603e2,paysage/models/tap_machine.py,TAP_rbm,gradient,#TAP_rbm#Any#Any#,242

Before Change

```python
m = self.persistent_samples[s]

# Compute the gradients at this minimizing magnetization
m_v_quad = m.v - be.square(m.v)
m_h_quad = m.h - be.square(m.h)
dw_EMF = -be.outer(m.v, m.h) - be.multiply(w, be.outer(m_v_quad, m_h_quad))
da_EMF = -m.v
db_EMF = -m.h

# compute average grad_F_marginal over the minibatch
```
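The "Before Change" arithmetic can be reproduced outside paysage as a minimal NumPy sketch. It assumes that `be.square`, `be.outer` and `be.multiply` behave like `np.square`, `np.outer` and elementwise `*`, and that `w` has shape `(n_visible, n_hidden)`. The function names are hypothetical. The second function is an assumption about what the trailing comment's `grad_F_marginal` refers to: the minibatch average of the gradient of the standard binary-RBM marginal free energy F(v) = -a·v - Σ_j softplus(b_j + (vW)_j). The excerpt does not show paysage's own version.

```python
import numpy as np


def tap2_emf_gradients(w, m_v, m_h):
    """Gradients of a second-order TAP (EMF) free energy w.r.t. (a, b, w),
    evaluated at fixed visible/hidden magnetizations m_v and m_h.
    Mirrors the arithmetic of the 'Before Change' snippet."""
    m_v_quad = m_v - np.square(m_v)  # m(1 - m): variance of a Bernoulli(m) unit
    m_h_quad = m_h - np.square(m_h)
    # mean-field part plus the second-order (Onsager) correction
    dw_emf = -np.outer(m_v, m_h) - w * np.outer(m_v_quad, m_h_quad)
    da_emf = -m_v
    db_emf = -m_h
    return da_emf, db_emf, dw_emf


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def mean_marginal_free_energy_gradients(v, b, w):
    """Minibatch average of dF/d(a, b, w) for a binary RBM with the assumed
    marginal free energy F(v) = -a.v - sum_j softplus(b_j + (v @ w)_j).
    dF/da = -v does not depend on a, so a is not needed as an input."""
    p_h = sigmoid(b + v @ w)  # E[h | v], shape (batch, n_hidden)
    da = -np.mean(v, axis=0)
    db = -np.mean(p_h, axis=0)
    dw = -(v.T @ p_h) / v.shape[0]
    return da, db, dw
```

Under the usual approximation log Z ≈ -Γ(m*), where Γ is the TAP free energy at its minimizing magnetization m*, the log-likelihood gradient is roughly the EMF gradient minus the averaged marginal gradient. Whether paysage combines the two terms with exactly these signs is not visible in this excerpt.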

After Change

```python
    grad_a_gamma = self.grad_a_gamma_MF
    grad_b_gamma = self.grad_b_gamma_MF
    grad_w_gamma = self.grad_w_gamma_MF
elif self.terms == 2:
    grad_a_gamma = self.grad_a_gamma_TAP2
    grad_b_gamma = self.grad_b_gamma_TAP2
    grad_w_gamma = self.grad_w_gamma_TAP2
elif self.terms == 3:
    grad_a_gamma = self.grad_a_gamma_TAP3
```
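The "After Change" version stops computing the EMF gradients inline and instead selects per-order gradient functions (`*_MF`, `*_TAP2`, `*_TAP3`) according to `self.terms`. The sketch below illustrates the same idea with a lookup table instead of an `if`/`elif` chain. It assumes that `terms == 1` selects the mean-field functions (the branch header is cut off in the excerpt), packs the a/b/w gradients into one function per order rather than paysage's three separate attributes, and omits the third-order term because its formula is not shown here. All names are hypothetical.

```python
from typing import Callable, Dict, Tuple

import numpy as np

Grads = Tuple[np.ndarray, np.ndarray, np.ndarray]


def grad_gamma_mf(w, m_v, m_h) -> Grads:
    """First-order (mean-field) term: no Onsager correction, so w is unused."""
    return -m_v, -m_h, -np.outer(m_v, m_h)


def grad_gamma_tap2(w, m_v, m_h) -> Grads:
    """Second-order TAP: mean-field gradients plus -w * outer(var_v, var_h)."""
    da, db, dw = grad_gamma_mf(w, m_v, m_h)
    var_v = m_v - np.square(m_v)
    var_h = m_h - np.square(m_h)
    return da, db, dw - w * np.outer(var_v, var_h)


# Hypothetical registry keyed by the number of expansion terms.
GRAD_GAMMA: Dict[int, Callable[..., Grads]] = {
    1: grad_gamma_mf,
    2: grad_gamma_tap2,
    # 3: third-order term omitted in this sketch
}


def select_grad_gamma(terms: int) -> Callable[..., Grads]:
    """Return the gradient function for the requested expansion order."""
    try:
        return GRAD_GAMMA[terms]
    except KeyError:
        raise ValueError(f"unsupported number of TAP terms: {terms}") from None
```

A table keeps the order-to-function mapping in one place and turns an unsupported `terms` value into an explicit error rather than leaving the gradient variables unassigned.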

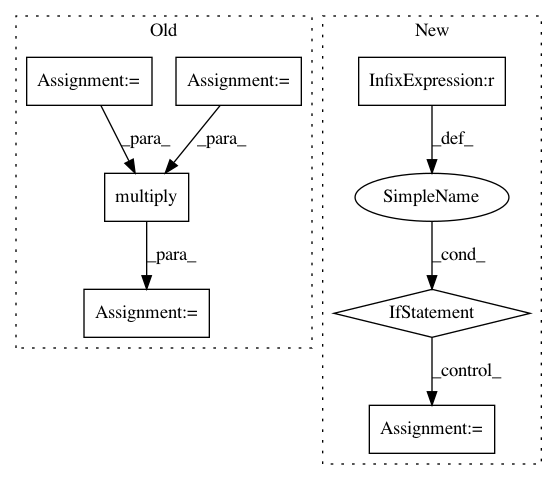

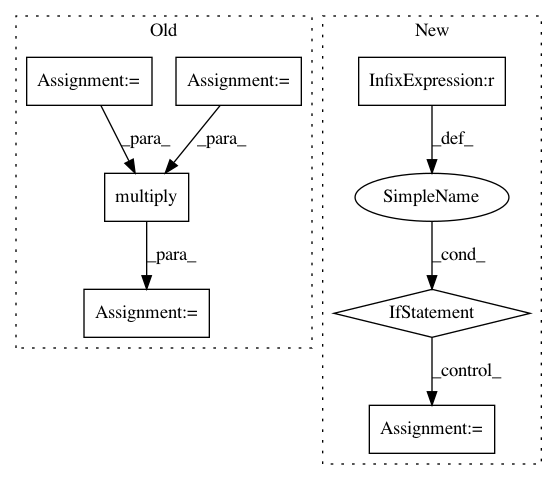

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 7

Instances

| Project Name | Commit Name | Time | Author | File Name | Class Name | Method Name |
|---|---|---|---|---|---|---|
| drckf/paysage | 554a2bff60820efb49ae777b02bf4bd9a71603e2 | 2017-04-20 | geminatea@gmail.com | paysage/models/tap_machine.py | TAP_rbm | gradient |
| tensorflow/models | 570d9a2b06fd6269c930d7fddf38bc60b212ebee | 2020-07-21 | hongkuny@google.com | official/nlp/modeling/layers/attention.py | CachedAttention | call |
| tensorflow/models | 36101ab4095065a4196ff4f6437e94f0d91df4e9 | 2020-07-21 | hongkuny@google.com | official/nlp/modeling/layers/attention.py | CachedAttention | call |