eac38dbe9694bdfa6c2050528d8cc6a64747e933,pymanopt/autodiff/backends/_autograd.py,_AutogradBackend,compute_gradient,#_AutogradBackend#Any#Any#,38

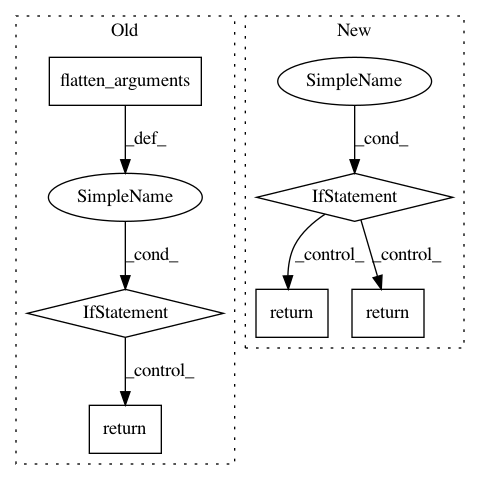

Before Change

    @Backend._assert_backend_available
    def compute_gradient(self, function, arguments):
        flattened_arguments = flatten_arguments(arguments)

        if len(flattened_arguments) == 1:
            return autograd.grad(function)

        # XXX: This path handles cases where the signature hint looks like
        #      "@Autograd(("x", "y"))". This is potentially unnecessary as
        #      tests also pass if we instead use "@Autograd". Revisit this
        #      once we ported more complicated examples to autograd.
        if len(arguments) == 1:
            @functools.wraps(function)
            def unary_function(arguments):
                return function(*arguments)
            return autograd.grad(unary_function)

        # Turn `function` into a function accepting a single argument which
        # gets unpacked when the function is called. This is necessary for
        # autograd to compute and return the gradient for each input in the
        # input tuple/list and return it in the same grouping.
        # In order to unpack arguments correctly, we also need a signature hint
        # in the form of `arguments`. This is because autograd wraps tuples and
        # lists in `SequenceBox` types which are not derived from tuple or list
        # so we cannot detect nested arguments automatically.
        unary_function = unpack_arguments(function, signature=arguments)
        return autograd.grad(unary_function)

    @staticmethod
    def _compute_nary_hessian_vector_product(function):
        gradient = autograd.grad(function)
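
The single-group branch above wraps `function` so that it accepts one tuple and unpacks it when called. A minimal stand-alone sketch of that wrapping step follows; `make_unary` and `cost` are hypothetical names that are not part of pymanopt, and autograd is deliberately left out so the snippet runs with the standard library alone.

```python
import functools


def make_unary(function):
    """Wrap an n-ary function so it takes a single tuple of arguments."""

    @functools.wraps(function)
    def unary_function(arguments):
        # Unpack the tuple into positional arguments at call time.
        return function(*arguments)

    return unary_function


def cost(x, y):
    # Hypothetical two-argument cost function used only for illustration.
    return x * x + 3 * y


unary_cost = make_unary(cost)
```

Calling `unary_cost((2.0, 1.0))` gives the same value as `cost(2.0, 1.0)`, and `functools.wraps` keeps the original function name on the wrapper.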

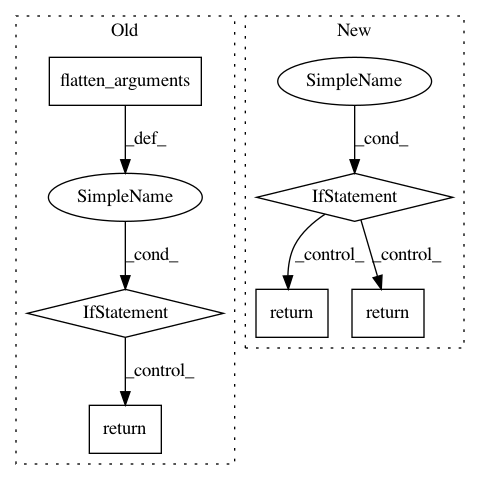

After Change

    def compute_gradient(self, function, arguments):
        num_arguments = len(arguments)
        gradient = autograd.grad(function, argnum=list(range(num_arguments)))
        if num_arguments > 1:
            return gradient
        return self._unpack_return_value(gradient)

    @Backend._assert_backend_available
    def compute_hessian_vector_product(self, function, arguments):
        num_arguments = len(arguments)
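
The refactored `compute_gradient` differentiates with respect to every positional argument and unwraps the result only when there is a single argument. The sketch below mimics that control flow under stated assumptions: `numerical_grad` is a hypothetical finite-difference stand-in for `autograd.grad` that handles scalar arguments only, and `unpack_return_value` is a guess at what `_unpack_return_value` does, since its body is not shown in this change.

```python
import functools


def numerical_grad(function, argnum, eps=1e-6):
    """Hypothetical stand-in for autograd.grad using central differences.

    Returns a function producing one partial derivative per index in
    `argnum`, grouped in a tuple. Works for scalar arguments only.
    """

    def gradient(*args):
        partials = []
        for i in argnum:
            up, down = list(args), list(args)
            up[i] += eps
            down[i] -= eps
            partials.append((function(*up) - function(*down)) / (2 * eps))
        return tuple(partials)

    return gradient


def unpack_return_value(function):
    """Assumed behavior: turn a one-element result into the bare element."""

    @functools.wraps(function)
    def wrapper(*args, **kwargs):
        (result,) = function(*args, **kwargs)
        return result

    return wrapper


def compute_gradient(function, arguments):
    # Same branching as the refactored method, minus the backend class.
    num_arguments = len(arguments)
    gradient = numerical_grad(function, argnum=list(range(num_arguments)))
    if num_arguments > 1:
        return gradient
    return unpack_return_value(gradient)


# Two arguments: the gradient comes back as a tuple, one entry per argument.
grad_xy = compute_gradient(lambda x, y: x * y, ("x", "y"))
# One argument: the one-element tuple is unwrapped to a bare value.
grad_x = compute_gradient(lambda x: x ** 2, ("x",))
```

With this shape, callers with several arguments receive one gradient per argument in a tuple, while single-argument callers receive the gradient itself rather than a one-element tuple.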

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 6

Instances

Project Name: pymanopt/pymanopt

Commit Name: eac38dbe9694bdfa6c2050528d8cc6a64747e933

Time: 2020-02-01

Author: niklas.koep@gmail.com

File Name: pymanopt/autodiff/backends/_autograd.py

Class Name: _AutogradBackend

Method Name: compute_gradient

Project Name: pymanopt/pymanopt

Commit Name: 818492efd4238bd8fedcff105bd46044a714f762

Time: 2020-02-01

Author: niklas.koep@gmail.com

File Name: pymanopt/autodiff/backends/_pytorch.py

Class Name: _PyTorchBackend

Method Name: compute_gradient

Project Name: pymanopt/pymanopt

Commit Name: 4a28bbf9659d96e15f0f241bcab76381e299097c

Time: 2020-02-01

Author: niklas.koep@gmail.com

File Name: pymanopt/autodiff/backends/_tensorflow.py

Class Name: _TensorFlowBackend

Method Name: compute_gradient