f75a44cac3ae7bfc8810bad5127854a131d48a9c,reagent/ope/estimators/contextual_bandits_estimators.py,DoublyRobustEstimator,evaluate,#DoublyRobustEstimator#Any#,257

Before Change

    gt_avg = RunningAverage()
    for sample in input.samples:
        log_avg.add(sample.log_reward)
        weight = (
            sample.tgt_action_probabilities[sample.log_action]
            / sample.log_action_probabilities[sample.log_action]
        )
        weight = self._weight_clamper(weight)
        dm_action_reward, dm_reward = self._calc_dm_reward(
            input.action_space, sample
        )
        tgt_avg.add((sample.log_reward - dm_action_reward) * weight + dm_reward)
        gt_avg.add(sample.ground_truth_reward)
    return EstimatorResult(
        log_avg.average, tgt_avg.average, gt_avg.average, tgt_avg.count
    )

After Change

    log_avg = RunningAverage()
    logged_vals = []
    tgt_avg = RunningAverage()
    tgt_vals = []
    gt_avg = RunningAverage()
    for sample in input.samples:
        log_avg.add(sample.log_reward)
        logged_vals.append(sample.log_reward)
        dm_action_reward, dm_reward = self._calc_dm_reward(
            input.action_space, sample
        )
        tgt_result = 0.0
        weight = 0.0
        if sample.log_action is not None:
            weight = (
                0.0
                if sample.log_action_probabilities[sample.log_action]
                < PROPENSITY_THRESHOLD
                else sample.tgt_action_probabilities[sample.log_action]
                / sample.log_action_probabilities[sample.log_action]
            )
            weight = self._weight_clamper(weight)
            assert dm_action_reward is not None
            assert dm_reward is not None
            tgt_result += (
                sample.log_reward - dm_action_reward
            ) * weight + dm_reward
        else:
            tgt_result = dm_reward
        tgt_avg.add(tgt_result)
        tgt_vals.append(tgt_result)
        gt_avg.add(sample.ground_truth_reward)
    (
        tgt_score,
        tgt_score_normalized,
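
The per-sample logic in the After Change excerpt can be isolated as a small standalone function. The following is a minimal sketch, not the project's code: the function name `doubly_robust_value`, the flat argument list, the value `1e-6` for `PROPENSITY_THRESHOLD`, and the `max_weight` clip standing in for `self._weight_clamper` are all assumptions made for illustration, since the excerpt shows none of those definitions.

```python
from typing import Optional, Sequence

# Assumed value for illustration; the excerpt does not show the real constant.
PROPENSITY_THRESHOLD = 1e-6


def doubly_robust_value(
    log_reward: float,
    log_action: Optional[int],
    log_probs: Sequence[float],
    tgt_probs: Sequence[float],
    dm_action_reward: float,
    dm_reward: float,
    max_weight: float = 100.0,  # hypothetical stand-in for self._weight_clamper
) -> float:
    """Per-sample doubly robust estimate, following the After Change branches."""
    if log_action is None:
        # No logged action: fall back to the direct-method estimate alone.
        return dm_reward
    log_prob = log_probs[log_action]
    # Zero the importance weight when the logging propensity is below the
    # threshold, instead of dividing by a tiny or zero probability.
    if log_prob < PROPENSITY_THRESHOLD:
        weight = 0.0
    else:
        weight = tgt_probs[log_action] / log_prob
    # Assumed clamping behavior: cap the weight from above.
    weight = min(weight, max_weight)
    # Importance-weighted correction of the model's error, plus the model estimate.
    return (log_reward - dm_action_reward) * weight + dm_reward


if __name__ == "__main__":
    # Logging propensity of 0.0 for the logged action: the weight is zeroed,
    # so the result is just the direct-method reward.
    print(doubly_robust_value(1.0, 0, [0.0, 1.0], [0.5, 0.5], 0.2, 0.3))
```

In this sketch, a missing logged action and a near-zero logging propensity both reduce to the direct-method term `dm_reward`, which matches the two guards the After Change code adds around the importance weight.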

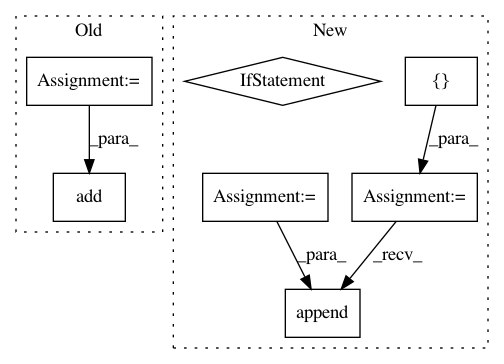

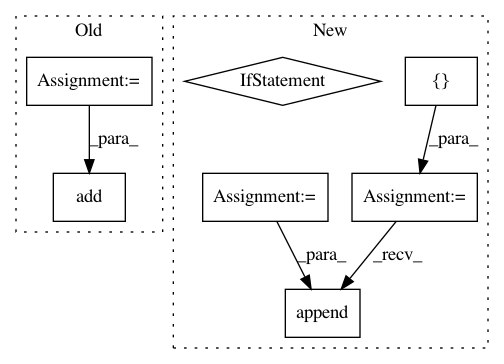

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 7

Instances

Project Name: facebookresearch/Horizon

Commit Name: f75a44cac3ae7bfc8810bad5127854a131d48a9c

Time: 2020-06-30

Author: alexschneidman@fb.com

File Name: reagent/ope/estimators/contextual_bandits_estimators.py

Class Name: DoublyRobustEstimator

Method Name: evaluate

Project Name: shenweichen/DeepCTR

Commit Name: 8182ea386e6529a1a2294d8e2d33fc040d0cbfb2

Time: 2019-07-21

Author: wcshen1994@163.com

File Name: deepctr/inputs.py

Class Name:

Method Name: get_linear_logit

Project Name: IndicoDataSolutions/finetune

Commit Name: 5b1cfa22c1dc08e92f8a21311728397cfb84ffe1

Time: 2018-08-22

Author: madison@indico.io

File Name: finetune/utils.py

Class Name:

Method Name: finetune_to_indico_sequence