78eba7b3f82b8420deac3cd28318dbfead0f9b9e,python/baseline/pytorch/seq2seq/model.py,Seq2SeqModel,encode,#Seq2SeqModel#Any#Any#,160

Before Change

embed_in_seq = self.embed(input)

# if self.training:

packed = torch.nn.utils.rnn.pack_padded_sequence(embed_in_seq, src_len.data.tolist())

output_tbh, hidden = self.encoder_rnn(packed)

output_tbh, _ = torch.nn.utils.rnn.pad_packed_sequence(output_tbh)

# else:

#     output_tbh, hidden = self.encoder_rnn(embed_in_seq)

return output_tbh, hidden

def decoder(self, context_tbh, h_i, output_i, dst, src_mask):

embed_out_tbh = self.tgt_embedding(dst)

context_bth = context_tbh.transpose(0, 1)

After Change

lengths = lengths.cuda()

example["src_len"] = lengths

for key in self.src_embeddings.keys():

tensor = torch.from_numpy(batch_dict[key])

tensor = tensor[perm_idx]

example[key] = tensor

if self.gpu:

example[key] = example[key].cuda()

if "tgt" in batch_dict:

tgt = torch.from_numpy(batch_dict["tgt"])

example["dst"] = tgt[:, :-1]

example["tgt"] = tgt[:, 1:]

example["dst"] = example["dst"][perm_idx]

example["tgt"] = example["tgt"][perm_idx]

if self.gpu:
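
The batch preparation in the "After Change" excerpt can be illustrated with a minimal, dependency-free sketch. It uses plain Python lists in place of tensors and is not the project's implementation. The excerpt does not show how `perm_idx` is computed, so the descending sort by source length below is an assumption (it is the ordering `pack_padded_sequence` conventionally expects). The helper names `sort_by_length` and `make_example`, the single `"src"` key standing in for the keys of `self.src_embeddings`, and the token ids are all hypothetical.

```python
from typing import Dict, List, Sequence


def sort_by_length(lengths: Sequence[int]) -> List[int]:
    """Return indices ordering the batch from longest to shortest source.

    Assumption: this plays the role of `perm_idx` in the excerpt.
    """
    return sorted(range(len(lengths)), key=lambda i: lengths[i], reverse=True)


def make_example(batch: Dict[str, List[List[int]]],
                 lengths: Sequence[int]) -> Dict[str, list]:
    """Reorder a batch by source length and split the target for teacher forcing."""
    perm_idx = sort_by_length(lengths)
    example: Dict[str, list] = {"src_len": [lengths[i] for i in perm_idx]}
    # "src" is a hypothetical stand-in for each key in self.src_embeddings.
    example["src"] = [batch["src"][i] for i in perm_idx]
    if "tgt" in batch:
        # dst drops the last token (decoder input);
        # tgt drops the first token (what the decoder should predict).
        example["dst"] = [batch["tgt"][i][:-1] for i in perm_idx]
        example["tgt"] = [batch["tgt"][i][1:] for i in perm_idx]
    return example


# Made-up token ids: 1 = start, 2 = end, 0 = padding.
batch = {
    "src": [[5, 6, 0], [7, 8, 9]],
    "tgt": [[1, 11, 12, 2], [1, 13, 14, 2]],
}
example = make_example(batch, lengths=[2, 3])
print(example)
```

Applying the same permutation to every field keeps each source row aligned with its length and its target, which is why the excerpt indexes `tensor`, `dst`, and `tgt` with the same `perm_idx`.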

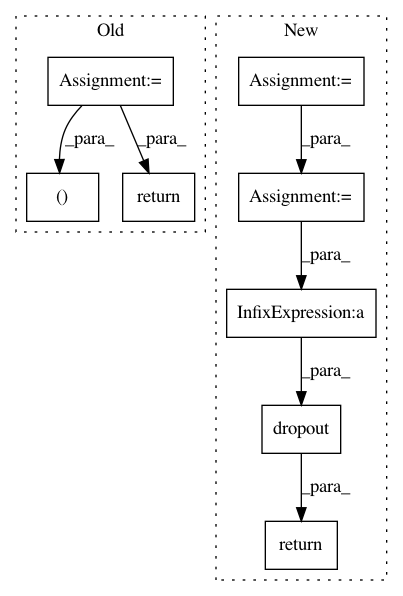

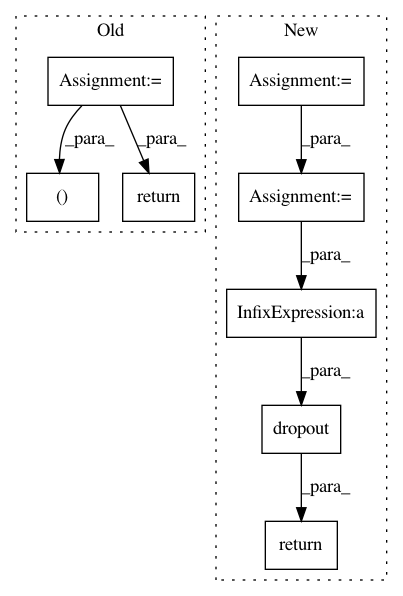

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 8

Instances

Project Name: dpressel/mead-baseline

Commit Name: 78eba7b3f82b8420deac3cd28318dbfead0f9b9e

Time: 2018-10-30

Author: dpressel@gmail.com

File Name: python/baseline/pytorch/seq2seq/model.py

Class Name: Seq2SeqModel

Method Name: encode

Project Name: dpressel/mead-baseline

Commit Name: 51498b09368a61bfb06849693688df6eb54d4787

Time: 2019-11-14

Author: blester125@gmail.com

File Name: python/eight_mile/tf/embeddings.py

Class Name: PositionalCharConvEmbeddings

Method Name: encode

Project Name: dpressel/mead-baseline

Commit Name: 51498b09368a61bfb06849693688df6eb54d4787

Time: 2019-11-14

Author: blester125@gmail.com

File Name: python/eight_mile/tf/embeddings.py

Class Name: PositionalLookupTableEmbeddings

Method Name: encode