faf3aa876462323f2fa721ebd633752d6489808f,sru/modules.py,SRU,forward,#SRU#Any#Any#Any#,536

Before Change

        device=batch_sizes.device).expand(length, batch_size)

    mask_pad = (mask_pad >= batch_sizes.view(length, 1)).contiguous()

else:

    length = input.size(0)

    batch_size = input.size(1)

    batch_sizes = None

    sorted_indices = None

    unsorted_indices = None

After Change

orig_input = input

if isinstance(orig_input, PackedSequence):

    input, lengths = nn.utils.rnn.pad_packed_sequence(input)

    max_length = lengths.max().item()

    mask_pad = torch.ByteTensor([[0] * l + [1] * (max_length - l) for l in lengths.tolist()])

    mask_pad = mask_pad.to(input.device).transpose(0, 1).contiguous()

# The dimensions of `input` should be: `(sequence_length, batch_size, input_size)`.

if input.dim() != 3:

    raise ValueError("There must be 3 dimensions for (length, batch_size, input_size)")
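The mask built in the After Change code can be sketched without PyTorch, using nested lists in place of tensors. This is only an illustration under stated assumptions: the helper names `mask_from_lengths` and `mask_from_batch_sizes` are hypothetical and do not appear in the commit. The second helper also assumes that the truncated left-hand side of the Before Change comparison is a per-time-step index range `0..batch_size-1`. For sequences sorted longest-first, both constructions appear to yield the same `(length, batch_size)` padding mask.

```python
def mask_from_lengths(lengths):
    """List-based stand-in for the After Change mask.

    Returns a (max_length, batch_size) nested list where 1 marks padding.
    """
    max_length = max(lengths)
    # One row per sequence: 0 for real positions, 1 for padded positions.
    per_sequence = [[0] * n + [1] * (max_length - n) for n in lengths]
    # Transpose (batch_size, max_length) -> (max_length, batch_size),
    # mirroring the .transpose(0, 1) call in the commit.
    return [list(step) for step in zip(*per_sequence)]


def mask_from_batch_sizes(batch_sizes, batch_size):
    """List-based stand-in for the Before Change comparison.

    ASSUMPTION: the truncated expression compares a 0..batch_size-1 index
    range against the number of sequences still active at each time step.
    """
    return [[int(b >= active) for b in range(batch_size)]
            for active in batch_sizes]


# Hypothetical example data: three sequences sorted longest-first.
lengths = [4, 2, 2]
# Number of sequences still active at each of the 4 time steps.
batch_sizes = [3, 3, 1, 1]

mask_after = mask_from_lengths(lengths)
mask_before = mask_from_batch_sizes(batch_sizes, batch_size=len(lengths))
print(mask_after)
print(mask_after == mask_before)
```

The equivalence shown here relies on the longest-first ordering. `pad_packed_sequence` returns the padded batch and `lengths` in the original batch order, so a mask built from `lengths` lines up with the unpacked input even when that order is not sorted.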

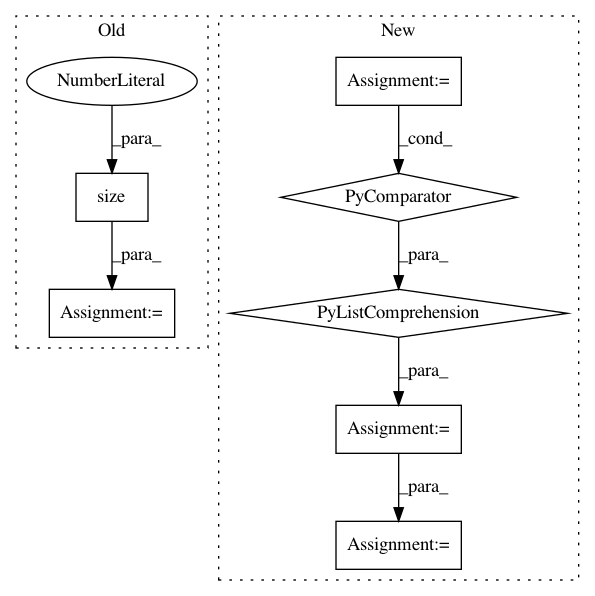

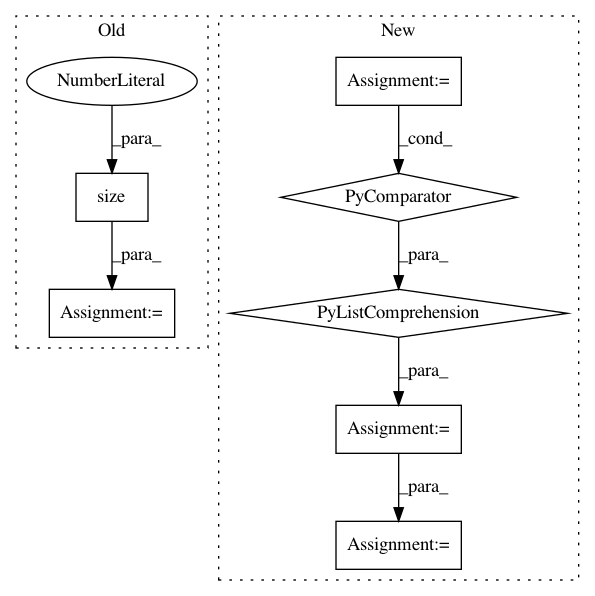

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 7

Instances

Project Name: asappresearch/sru

Commit Name: faf3aa876462323f2fa721ebd633752d6489808f

Time: 2020-09-18

Author: taolei@csail.mit.edu

File Name: sru/modules.py

Class Name: SRU

Method Name: forward

Project Name: cornellius-gp/gpytorch

Commit Name: 91b0d220c8e816766fd4565e1d2f5115d3afbefe

Time: 2018-10-12

Author: gpleiss@gmail.com

File Name: gpytorch/utils/cholesky.py

Class Name:

Method Name: batch_potrs

Project Name: cornellius-gp/gpytorch

Commit Name: 91b0d220c8e816766fd4565e1d2f5115d3afbefe

Time: 2018-10-12

Author: gpleiss@gmail.com

File Name: gpytorch/utils/cholesky.py

Class Name:

Method Name: batch_potrf