071b27b1ffc1c38e84f64fafcc126fafa54369a1,cistar-dev/cistar/envs/loop_accel.py,SimpleAccelerationEnvironment,compute_reward,#SimpleAccelerationEnvironment#Any#Any#,54

Before Change

    return max(max_cost - cost, 0)

elif reward_type == "distance":

    distance = np.array([self.vehicles[veh_id]["absolute_position"] - self.initial_pos[veh_id]
                         for veh_id in self.ids])

    return sum(distance)

def getState(self):

After Change

See parent class

vel = state[0]

if any(vel < -100) or kwargs["fail"]:

    return 0.0

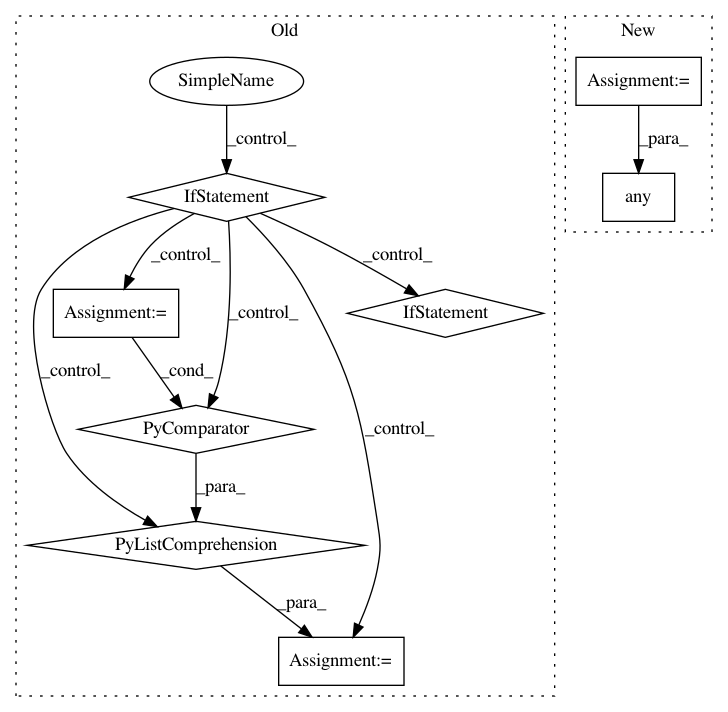

max_cost = np.array([self.env_params["target_velocity"]]*self.scenario.num_vehicles)

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 8

Instances

Project Name: flow-project/flow

Commit Name: 071b27b1ffc1c38e84f64fafcc126fafa54369a1

Time: 2017-07-05

Author: akreidieh@gmail.com

File Name: cistar-dev/cistar/envs/loop_accel.py

Class Name: SimpleAccelerationEnvironment

Method Name: compute_reward

Project Name: scikit-learn-contrib/DESlib

Commit Name: f0c15f219b0761b14329ddd416cda82fa4bae841

Time: 2018-03-28

Author: rafaelmenelau@gmail.com

File Name: deslib/dcs/mcb.py

Class Name: MCB

Method Name: estimate_competence

Project Name: mathics/Mathics

Commit Name: e56f91c9b60f561712d28faae3e4d047adc67760

Time: 2016-09-14

Author: Bernhard.Liebl@gmx.org

File Name: mathics/builtin/importexport.py

Class Name: Import

Method Name: apply
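
The `compute_reward` snippets above are fragments, so the following is only a minimal stand-alone sketch of how the two reward branches might fit together. The function names `desired_velocity_reward` and `distance_reward`, their parameters, and the use of a Euclidean norm for `cost` and `max_cost` are assumptions made for illustration; the record shows only the guard clause, the `max_cost` array, the clipped `max(max_cost - cost, 0)` return, and the summed displacement.

```python
import numpy as np


def desired_velocity_reward(vel, target_velocity, fail=False):
    """Hypothetical stand-alone version of the guarded velocity reward."""
    vel = np.asarray(vel, dtype=float)

    # Guard clause mirroring the After Change snippet: a failure flag or an
    # implausible velocity short-circuits to zero reward.
    if fail or np.any(vel < -100):
        return 0.0

    # Assumption: max_cost is the norm of a vector holding the target
    # velocity once per vehicle, and cost is the norm of the deviation.
    max_cost = np.linalg.norm(np.full(vel.shape, target_velocity))
    cost = np.linalg.norm(vel - target_velocity)

    # Clip at zero so the reward is never negative.
    return max(max_cost - cost, 0.0)


def distance_reward(positions, initial_positions):
    """Hypothetical version of the "distance" branch: total displacement."""
    # Both arguments are dicts keyed by vehicle id (an assumption here).
    return sum(positions[v] - initial_positions[v] for v in positions)
```

With these definitions, a call such as `desired_velocity_reward([10.0, 10.0], 10.0, fail=True)` returns `0.0` before any cost is computed, which is the behaviour the added guard introduces.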