10b7c76231fe2fd1ee0ab5260ec67a424c37a1a9,torchsample/modules/super_module.py,SuperModule,fit,#SuperModule#Any#Any#Any#Any#Any#Any#Any#Any#Any#,112

Before Change

callbacks.on_train_begin()

nb_batches = int(math.ceil(inputs[0].size(0) / batch_size))

for epoch_idx in range(nb_epoch):

epoch_logs = {

"nb_batches": nb_batches,

"nb_epoch": nb_epoch,

"has_validation_data": has_validation_data

}

callbacks.on_epoch_begin(epoch_idx, epoch_logs)

for batch_idx in range(nb_batches):

batch_logs = {"batch_idx": batch_idx}

callbacks.on_batch_begin(batch_idx, batch_logs)

input_batch = [Variable(x[batch_idx*batch_size:(batch_idx+1)*batch_size]) for x in inputs]

if has_target:

target_batch = [Variable(y[batch_idx*batch_size:(batch_idx+1)*batch_size]) for y in targets]

if cuda_device > -1:

input_batch = [ins.cuda(cuda_device) for ins in input_batch]

if has_target:

target_batch = [targs.cuda(cuda_device) for targs in target_batch]

batch_logs["batch_samples"] = len(input_batch[0])

//// ZERO GRAD AND FORWARD PASS

self._optimizer.zero_grad()

outputs = self(*input_batch)

if not isinstance(outputs, (list,tuple)):

outputs = [outputs]

if has_target:

loss = self._loss_fns[0](outputs[0], target_batch[0])

for loss_idx in range(1,nb_targets):

if self._has_multiple_loss_fns:

loss += self._loss_fns[loss_idx](outputs[loss_idx], target_batch[loss_idx])

else:

loss += self._loss_fns[0](outputs[loss_idx], target_batch[loss_idx])

else:

loss = self._loss_fns[0](outputs[0])

for loss_idx in range(1,nb_targets):

if self._has_multiple_loss_fns:

loss += self._loss_fns[loss_idx](outputs[loss_idx])

else:

loss += self._loss_fns[0](outputs[loss_idx])

if self._has_regularizers:

regularizer_loss = regularizers(self)

loss += regularizer_loss

batch_logs["regularizer_loss"] = regularizer_loss

if self._has_constraints and constraints.has_lagrangian:

constraint_loss = constraints(self)

loss += constraint_loss

batch_logs["constraint_loss"] = constraint_loss

batch_logs["loss"] = loss.data[0]

if self._has_metrics:

metric_logs = metrics(outputs[0], target_batch[0])

batch_logs.update(metric_logs)

// BACKWARD PASS AND OPTIMIZER STEP

loss.backward()

self._optimizer.step()

callbacks.on_batch_end(batch_idx, batch_logs)

if self._has_constraints:

constraints.on_batch_end(batch_idx)

if has_validation_data:

val_loss = self.evaluate(*validation_data,

batch_size=batch_size,

cuda_device=cuda_device)

if self._has_metrics:

val_loss, val_metric_logs = val_loss

epoch_logs.update(val_metric_logs)

epoch_logs["val_loss"] = val_loss

self.history.batch_metrics["val_loss"] = val_loss

// END OF EPOCH

epoch_logs.update(self.history.batch_metrics)

if self._has_metrics:

epoch_logs.update(metric_logs)

callbacks.on_epoch_end(epoch_idx, epoch_logs)

if self._has_constraints:

constraints.on_epoch_end(epoch_idx)

if self._has_metrics:

metrics.reset()

if self._stop_training:

break

callbacks.on_train_end()

def fit_loader(self,

loader,

After Change

metrics = MetricsModule(self._metrics)

//// create callbacks

with TQDM() as pbar:

progressbar = []

if verbose > 0:

progressbar = [pbar]

callbacks = CallbackModule(self._callbacks + progressbar)

callbacks.set_model(self)

callbacks.on_train_begin()

nb_batches = int(math.ceil(inputs[0].size(0) / batch_size))

for epoch_idx in range(nb_epoch):

epoch_logs = {

"nb_batches": nb_batches,

"nb_epoch": nb_epoch,

"has_validation_data": has_validation_data

}

callbacks.on_epoch_begin(epoch_idx, epoch_logs)

for batch_idx in range(nb_batches):

batch_logs = {"batch_idx": batch_idx}

callbacks.on_batch_begin(batch_idx, batch_logs)

input_batch = [Variable(x[batch_idx*batch_size:(batch_idx+1)*batch_size]) for x in inputs]

if has_target:

target_batch = [Variable(y[batch_idx*batch_size:(batch_idx+1)*batch_size]) for y in targets]

if cuda_device > -1:

input_batch = [ins.cuda(cuda_device) for ins in input_batch]

if has_target:

target_batch = [targs.cuda(cuda_device) for targs in target_batch]

batch_logs["batch_samples"] = len(input_batch[0])

//// ZERO GRAD AND FORWARD PASS

self._optimizer.zero_grad()

outputs = self(*input_batch)

if not isinstance(outputs, (list,tuple)):

outputs = [outputs]

if has_target:

loss = self._loss_fns[0](outputs[0], target_batch[0])

for loss_idx in range(1,nb_targets):

if self._has_multiple_loss_fns:

loss += self._loss_fns[loss_idx](outputs[loss_idx], target_batch[loss_idx])

else:

loss += self._loss_fns[0](outputs[loss_idx], target_batch[loss_idx])

else:

loss = self._loss_fns[0](outputs[0])

for loss_idx in range(1,nb_targets):

if self._has_multiple_loss_fns:

loss += self._loss_fns[loss_idx](outputs[loss_idx])

else:

loss += self._loss_fns[0](outputs[loss_idx])

if self._has_regularizers:

regularizer_loss = regularizers(self)

loss += regularizer_loss

batch_logs["regularizer_loss"] = regularizer_loss

if self._has_constraints and constraints.has_lagrangian:

constraint_loss = constraints(self)

loss += constraint_loss

batch_logs["constraint_loss"] = constraint_loss

batch_logs["loss"] = loss.data[0]

if self._has_metrics:

metric_logs = metrics(outputs[0], target_batch[0])

batch_logs.update(metric_logs)

// BACKWARD PASS AND OPTIMIZER STEP

loss.backward()

self._optimizer.step()

callbacks.on_batch_end(batch_idx, batch_logs)

if self._has_constraints:

constraints.on_batch_end(batch_idx)

if has_validation_data:

val_loss = self.evaluate(*validation_data,

batch_size=batch_size,

cuda_device=cuda_device)

if self._has_metrics:

val_loss, val_metric_logs = val_loss

epoch_logs.update(val_metric_logs)

epoch_logs["val_loss"] = val_loss

self.history.batch_metrics["val_loss"] = val_loss

// END OF EPOCH

epoch_logs.update(self.history.batch_metrics)

if self._has_metrics:

epoch_logs.update(metric_logs)

callbacks.on_epoch_end(epoch_idx, epoch_logs)

if self._has_constraints:

constraints.on_epoch_end(epoch_idx)

if self._has_metrics:

metrics.reset()

if self._stop_training:

break

callbacks.on_train_end()

def fit_loader(self,

loader,

val_loader=None,

nb_epoch=100,

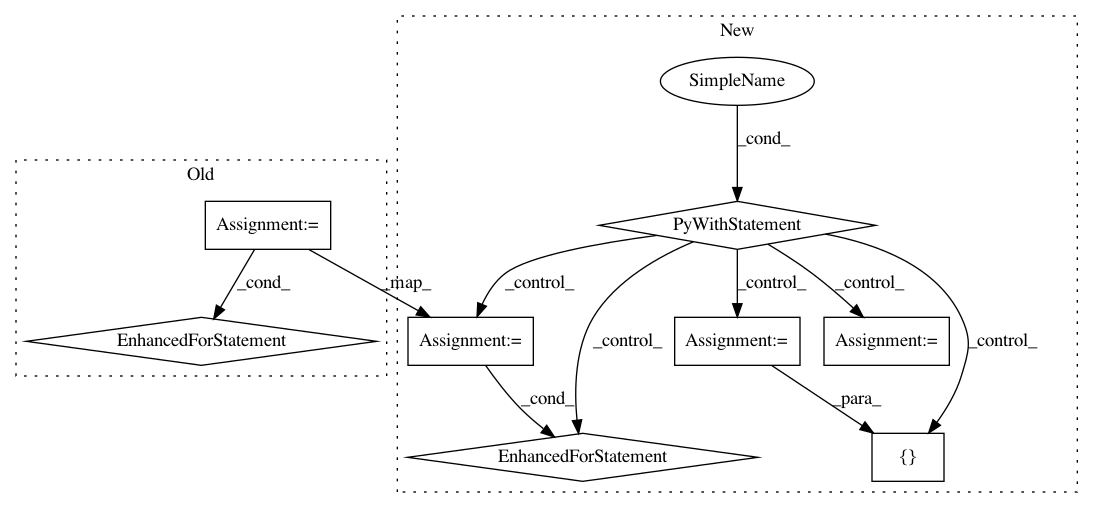

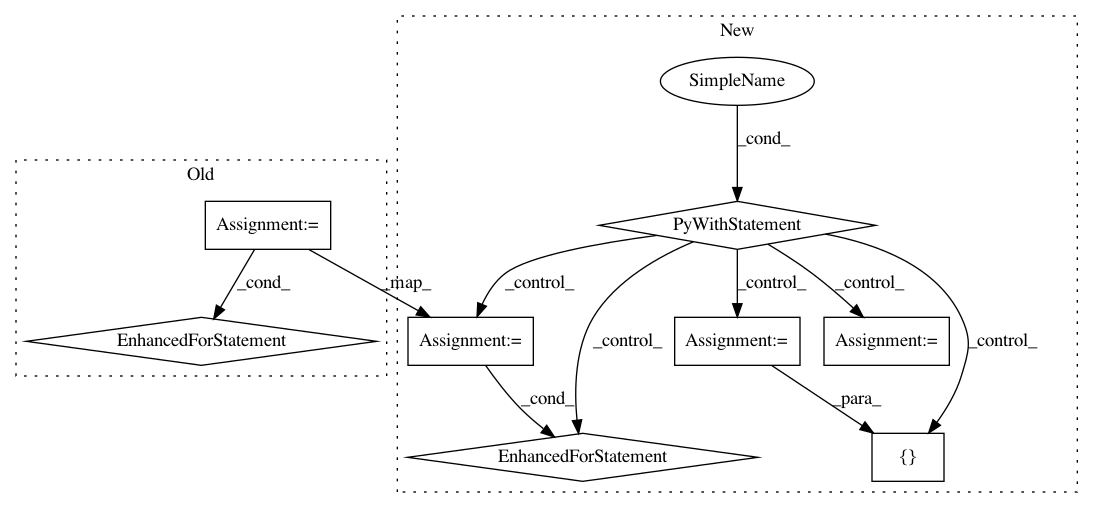

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 8

Instances

Project Name: ncullen93/torchsample

Commit Name: 10b7c76231fe2fd1ee0ab5260ec67a424c37a1a9

Time: 2017-04-28

Author: ncullen@Nicks-MacBook-Pro.local

File Name: torchsample/modules/super_module.py

Class Name: SuperModule

Method Name: fit

Project Name: pantsbuild/pants

Commit Name: 0093628056bbf454faf35709ee3c61acc133d0e9

Time: 2016-11-05

Author: wisechengyi@gmail.com

File Name: contrib/scrooge/src/python/pants/contrib/scrooge/tasks/thrift_linter.py

Class Name: ThriftLinter

Method Name: execute

Project Name: asyml/texar

Commit Name: cf0cb3360a77041187c655891da4ddffbe4b13dd

Time: 2018-11-27

Author: haoranshi97@gmail.com

File Name: texar/core/optimization.py

Class Name: AdamWeightDecayOptimizer

Method Name: apply_gradients