164dd4dd3df73af90931d0dcc6ccab94956adc32,src/models.py,HeadlessPairAttnEncoder,__init__,#HeadlessPairAttnEncoder#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#Any#,664

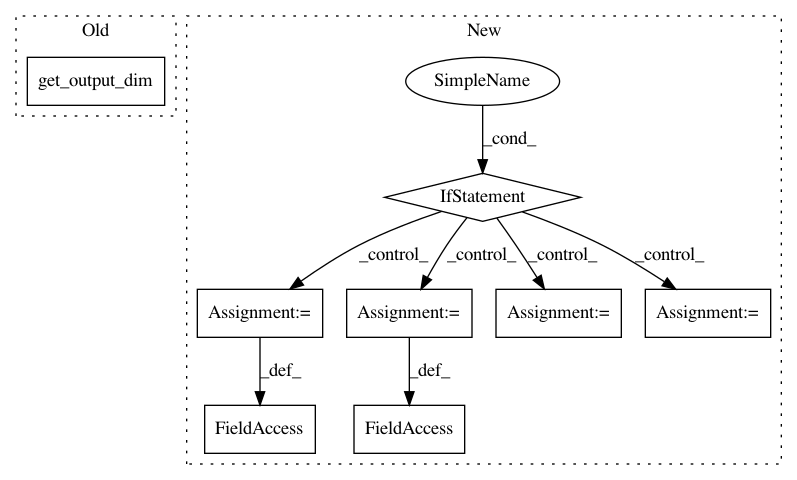

Before Change

encoding_dim))

# if text_field_embedder.get_output_dim() != phrase_layer.get_input_dim():

if (cove_layer is None and text_field_embedder.get_output_dim() != phrase_layer.get_input_dim()) \

or (cove_layer is not None and text_field_embedder.get_output_dim() + 600 != phrase_layer.get_input_dim()):

raise ConfigurationError("The output dimension of the "

"text_field_embedder (embedding_dim + "

After Change

initializer=InitializerApplicator(), regularizer=None):

super(HeadlessPairAttnEncoder, self).__init__(vocab)  # , regularizer)

if text_field_embedder is None:  # just using ELMo embeddings

self._text_field_embedder = lambda x: x

d_emb = 0

self._highway_layer = lambda x: x

else:

self._text_field_embedder = text_field_embedder

d_emb = text_field_embedder.get_output_dim()

self._highway_layer = TimeDistributed(Highway(d_emb, num_highway_layers))

self._phrase_layer = phrase_layer

self._matrix_attention = MatrixAttention(attention_similarity_function)

self._modeling_layer = modeling_layer

self._cove = cove_layer

In pattern: SUPERPATTERN

Frequency: 3

Non-data size: 8

Instances

Project Name: jsalt18-sentence-repl/jiant

Commit Name: 164dd4dd3df73af90931d0dcc6ccab94956adc32

Time: 2018-04-08

Author: wang.alex.c@gmail.com

File Name: src/models.py

Class Name: HeadlessPairAttnEncoder

Method Name: __init__

Project Name: allenai/allennlp

Commit Name: 700abc65fd2172a2c6809dd9b72cf50fc2407772

Time: 2020-02-03

Author: mattg@allenai.org

File Name: allennlp/models/decomposable_attention.py

Class Name: DecomposableAttention

Method Name: __init__

Project Name: jsalt18-sentence-repl/jiant

Commit Name: 164dd4dd3df73af90931d0dcc6ccab94956adc32

Time: 2018-04-08

Author: wang.alex.c@gmail.com

File Name: src/models.py

Class Name: HeadlessSentEncoder

Method Name: __init__
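
The After Change snippet for HeadlessPairAttnEncoder makes the text field embedder optional: when it is None, the embedder and the highway layer both become identity functions and d_emb is set to 0. The following is a minimal, dependency-free sketch of that fallback, not the jiant implementation. `ToyEmbedder`, `ToyEncoder`, `identity`, and `embed` are hypothetical names introduced for illustration, and the highway layer is replaced by a pass-through stand-in in both branches.

```python
def identity(x):
    """Pass the input through unchanged."""
    return x


class ToyEmbedder:
    """Hypothetical stand-in for a text field embedder with a fixed output size."""

    def __init__(self, dim):
        self._dim = dim

    def get_output_dim(self):
        return self._dim

    def __call__(self, tokens):
        # Return one zero vector per token; a real embedder would look up weights.
        return [[0.0] * self._dim for _ in tokens]


class ToyEncoder:
    """Hypothetical encoder showing the optional-embedder fallback."""

    def __init__(self, text_field_embedder=None):
        if text_field_embedder is None:
            # No embedder supplied: inputs are assumed to be embedded already.
            self._text_field_embedder = identity
            self.d_emb = 0
            self._highway_layer = identity
        else:
            self._text_field_embedder = text_field_embedder
            self.d_emb = text_field_embedder.get_output_dim()
            # Assumption: pass-through stand-in where the original builds
            # TimeDistributed(Highway(d_emb, num_highway_layers)).
            self._highway_layer = identity

    def embed(self, inputs):
        return self._highway_layer(self._text_field_embedder(inputs))


if __name__ == "__main__":
    print(ToyEncoder().d_emb)
    print(ToyEncoder(ToyEmbedder(3)).embed(["a", "b"]))
```

The sketch uses a named module-level `identity` function where the original uses `lambda x: x`. The behavior is the same; a named function can be pickled by the standard `pickle` module, which a lambda cannot.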